Estimating nitrogen concentrations in streams and rivers using NN

Antonio Fonseca

GeoComput & ML

May 20th, 2021

Exercise based on the:

Lecture: Artificial Neural Networks for geo-data

Introduction

The field of deep learning begins with the assumption that everything is a function, and leverages powerful tools like Gradient Descent to efficiently learn these functions. Although many deep learning tasks (like classification) require supervised learning (with labels, testing, and training sets), a rich subset of the field has developed potent methods for automated, non-linear unsupervised learning, in which all you need to provide is the data. These unsupervised methods include Autoencoders, Variational Autoencoders, and Generative Adversarial Networks. They can be used to visualize data or to compress it; to generate novel data, and even to learn the functions underlying your data. In this assignment, you’ll gain hands-on experience using simple networks to perform classification and regression on a variety of datasets, and will then apply these techniques to generate new samples using Variational Autoencoders and GANs.
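As a minimal sketch of the idea that learning means fitting a function by gradient descent, the following pure-Python example recovers the slope and intercept of a line from a handful of points. The data, the learning rate, the number of steps and the helper name `fit_line` are illustrative assumptions and are not part of the exercise.

```python
# Minimal gradient descent sketch: fit y = w*x + b by minimizing the mean squared error.
# The data, learning rate and function name are illustrative assumptions.

def fit_line(xs, ys, lr=0.05, steps=2000):
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # points on the line y = 2x + 1
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))
```

PyTorch automates the two steps written by hand here: computing the gradients and applying the update.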

This assignment will also serve as a hands-on introduction to PyTorch, one of the most widely used machine learning libraries in Python. It provides a framework of pre-built classes and helper functions that greatly simplify the creation of neural networks and their supporting code. Before PyTorch and similar libraries, machine learning researchers could spend days juggling weight and bias vectors or tediously implementing their own data processing functions. With PyTorch, this takes minutes.

Before diving into this assignment, you’ll need to install PyTorch and some other important packages (see below). The PyTorch website provides an interactive quick-start guide to tailor the installation to your system’s configuration https://pytorch.org/get-started/locally/. (The installation instructions will ask you to install torchvision in addition to torch. Thankfully we have a VM prepared for you!)

Needed packages for this lecture:

conda install pandas

conda install -c conda-forge scikit-learn

conda install -c anaconda seaborn

conda install -c conda-forge tensorflow

conda install pytorch torchvision torchaudio cudatoolkit=10.2 -c pytorch

conda install ipywidgets

Background

Geoenvironmental variables

Ground observations: Nitrogen in US streams

[1]:

import numpy as np

import codecs

import os

import copy

import json

from scipy.spatial.distance import cdist, pdist, squareform

from scipy.linalg import eigh

import matplotlib.pyplot as plt

from sklearn.cluster import KMeans

import random

from sklearn import manifold

import pandas as pd

from torch.nn import functional as F

from sklearn.metrics import r2_score

from sklearn.preprocessing import MinMaxScaler

import seaborn as sns

import torch

from torch.utils.data import Dataset, DataLoader

from torch.utils.data.sampler import SubsetRandomSampler,RandomSampler

from torchvision import datasets, transforms

from torch.nn.functional import softmax

from torch import optim, nn

import torchvision

import torchvision.transforms as transforms

import torchvision.datasets as datasets

import time

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print(device)

cpu

Loading data and Logistic Regression

[2]:

# Load the MNIST dataset (downloaded on first use)

mnist_train = datasets.MNIST(root = 'data', train=True, download=True, transform = transforms.ToTensor())

mnist_test = datasets.MNIST(root = 'data', train=False, download=True, transform = transforms.ToTensor())

Downloading http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz

Failed to download (trying next):

HTTP Error 503: Service Unavailable

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/train-images-idx3-ubyte.gz

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/train-images-idx3-ubyte.gz to data/MNIST/raw/train-images-idx3-ubyte.gz

Extracting data/MNIST/raw/train-images-idx3-ubyte.gz to data/MNIST/raw

Downloading http://yann.lecun.com/exdb/mnist/train-labels-idx1-ubyte.gz

Failed to download (trying next):

HTTP Error 503: Service Unavailable

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/train-labels-idx1-ubyte.gz

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/train-labels-idx1-ubyte.gz to data/MNIST/raw/train-labels-idx1-ubyte.gz

Extracting data/MNIST/raw/train-labels-idx1-ubyte.gz to data/MNIST/raw

Downloading http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz

Failed to download (trying next):

HTTP Error 503: Service Unavailable

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/t10k-images-idx3-ubyte.gz

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/t10k-images-idx3-ubyte.gz to data/MNIST/raw/t10k-images-idx3-ubyte.gz

Extracting data/MNIST/raw/t10k-images-idx3-ubyte.gz to data/MNIST/raw

Downloading http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz

Failed to download (trying next):

HTTP Error 503: Service Unavailable

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/t10k-labels-idx1-ubyte.gz

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/t10k-labels-idx1-ubyte.gz to data/MNIST/raw/t10k-labels-idx1-ubyte.gz

Extracting data/MNIST/raw/t10k-labels-idx1-ubyte.gz to data/MNIST/raw

Processing...

/home/user/miniconda3/lib/python3.8/site-packages/torchvision/datasets/mnist.py:502: UserWarning: The given NumPy array is not writeable, and PyTorch does not support non-writeable tensors. This means you can write to the underlying (supposedly non-writeable) NumPy array using the tensor. You may want to copy the array to protect its data or make it writeable before converting it to a tensor. This type of warning will be suppressed for the rest of this program. (Triggered internally at /opt/conda/conda-bld/pytorch_1616554788289/work/torch/csrc/utils/tensor_numpy.cpp:143.)

return torch.from_numpy(parsed.astype(m[2], copy=False)).view(*s)

Done!

[3]:

# Prepare the dataset

class MyDataset(Dataset):

    def __init__(self, data, target):
        self.data = torch.from_numpy(data).float()
        self.target = torch.from_numpy(target).float()

    def __getitem__(self, index):
        x = self.data[index]
        y = self.target[index]
        return x, y

    def __len__(self):
        return len(self.data)

[1]:

def DataPreProcessing(verbose=False):

dataset = pd.read_csv("/media/sf_LVM_shared/my_SE_data/exercise/txt/US_TN_season_1_proc.csv")

#Check for NaN in this table and drop them if there are

dataset.isna().sum()

dataset.dropna()

# Remove extra variables from the dataset (keep just the 47 predictors and the 'bcmean' (what we are predicting)

dataset = dataset.drop(["RVunif_bc","mean","std","cv","longitude","latitude","RVunif"],axis=1)

if verbose:

print('Example of the dataset: \n',dataset.head())

dataset_orig = dataset.copy()

# Rescale: differences in scales accross input variables may increase the difficulty of the problem being modeled and results on unstable weights for connections

sc = MinMaxScaler(feature_range = (0,1)) #Scaling features to a range between 0 and 1

# Scaling and translating each feature to our chosen range

dataset = sc.fit_transform(dataset)

dataset = pd.DataFrame(dataset, columns = dataset_orig.columns)

if verbose:

print('dataset (after transform): \n',dataset.head())

dataset_scaled = dataset.copy() #Just backup

inverse_data = sc.inverse_transform(dataset) #just to make sure it works

inverse_data = pd.DataFrame(inverse_data, columns = dataset_orig.columns)

if verbose:

print('inverse_data: \n',inverse_data.head())

#Check the overall stats

train_stats = dataset.describe()

train_stats.pop('bcmean') #because that is what we are trying to predict

train_stats = train_stats.transpose()

if verbose: print('train_stats: ',train_stats) #now train_stats has 47 predictors (as described in the paper).

labels = dataset.pop('bcmean')

if verbose: print('labels.describe: ',labels.describe())

dataset = MyDataset(dataset.to_numpy(), labels.to_numpy())

dataset_size = len(dataset)

if verbose: print('dataset_size: {}'.format(dataset_size))

validation_split=0.3

batch_size=25 #How many samples are actually going to be selected

# -- split dataset

indices = list(range(dataset_size))

split = int(np.floor(validation_split*dataset_size))

if verbose: print('samples in validation: {}'.format(split))

np.random.shuffle(indices) # Randomizing the indices is not a good idea if you want to model the sequence

train_indices, val_indices = indices[split:], indices[:split]

# -- create dataloaders

train_sampler = RandomSampler(train_indices)

valid_sampler = RandomSampler(val_indices)

dataloaders = {

'train': torch.utils.data.DataLoader(dataset, batch_size=batch_size, num_workers=1, sampler=train_sampler),

'val': torch.utils.data.DataLoader(dataset, batch_size=batch_size, num_workers=1, sampler=valid_sampler),

'test': torch.utils.data.DataLoader(dataset, batch_size=dataset_size, num_workers=1, shuffle=False),

'all_val': torch.utils.data.DataLoader(dataset, batch_size=split, num_workers=1, shuffle=True),

}

if verbose:

#Inspect the original mean (still missing some formatting)

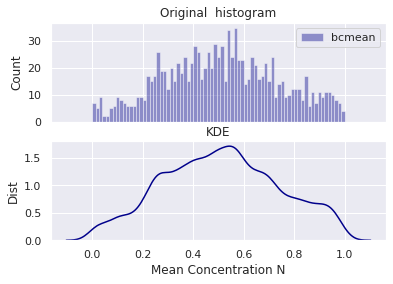

sns.set()

f, (ax1,ax2) = plt.subplots(2, 1,sharex=True)

sns.distplot(labels,hist=True,kde=False,bins=75,color='darkblue', ax=ax1, axlabel=False)

sns.kdeplot(labels,bw=0.15,legend=True,color='darkblue', ax=ax2)

ax1.set_title('Original histogram')

ax1.legend(['bcmean'])

ax2.set_title('KDE')

ax2.set_xlabel('Mean Concentration N')

ax1.set_ylabel('Count')

ax2.set_ylabel('Dist')

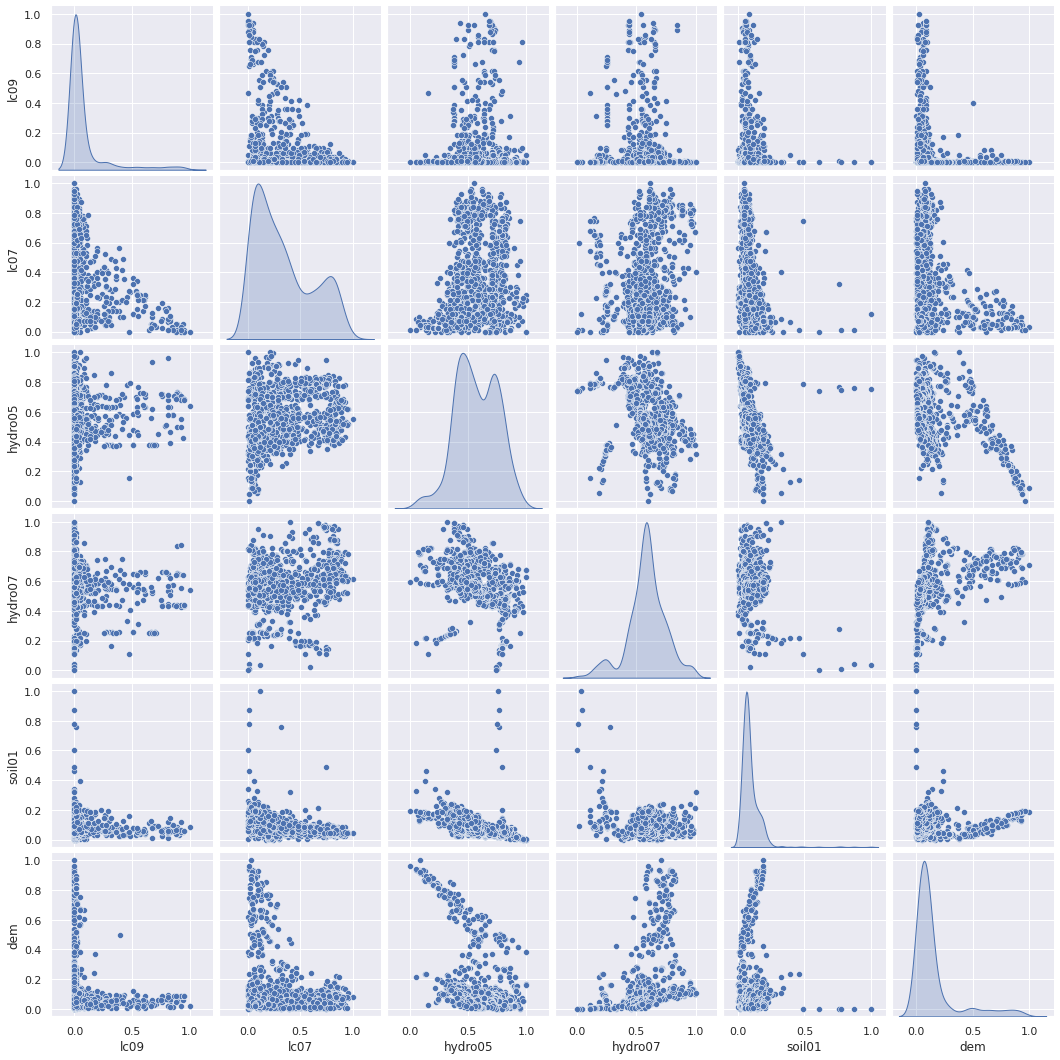

# Inspect the joint distribution of a few pairs of columns from the training set

# We can observe that the process of scalling the data did not affect the skewness of the data

if verbose:

sns.pairplot(dataset_scaled[["lc09", "lc07", "hydro05", "hydro07","soil01","dem"]], diag_kind="kde")

plt.show()

return dataloaders
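The rescaling step in `DataPreProcessing` maps each column to the range [0, 1] with (x - min) / (max - min), and the inverse transform undoes it with the stored minimum and maximum. The sketch below reproduces that arithmetic in plain Python for a single column. The helper names `min_max_scale` and `min_max_inverse` are hypothetical (they are not scikit-learn functions), the sample values are illustrative, and the handling of a constant column is an assumption made for this sketch.

```python
# Pure-Python sketch of min-max scaling to [0, 1] and its inverse for one column.
# min_max_scale and min_max_inverse are hypothetical helpers, not scikit-learn functions.

def min_max_scale(values):
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        # Constant column: map every value to 0.0 to avoid dividing by zero
        # (an assumption made for this sketch)
        return [0.0 for _ in values], lo, hi
    return [(v - lo) / span for v in values], lo, hi

def min_max_inverse(scaled, lo, hi):
    # Undo the scaling using the stored minimum and maximum
    return [s * (hi - lo) + lo for s in scaled]

column = [2.0, 4.0, 10.0]  # illustrative values
scaled, lo, hi = min_max_scale(column)
restored = min_max_inverse(scaled, lo, hi)
print(scaled)
print(restored)
```

Because the mapping is linear, it changes the range of each variable but not the shape of its distribution.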

[6]:

dataloaders = DataPreProcessing(verbose=True)

# Check how the dataloader works

dataiter = iter(dataloaders['val'])

samples_tmp, labels_tmp = next(dataiter)

print('Dim of raw sample image: {}\n'.format(samples_tmp.shape))

print('Dim of labels: {}\n'.format(labels_tmp.shape))

print('max {}, min {}'.format(torch.max(samples_tmp),torch.min(samples_tmp)))

print('max_labels {}, min {}'.format(torch.max(labels_tmp),torch.min(labels_tmp)))

Example of the dataset:

lc01 lc02 lc03 lc04 lc05 lc06 lc07 lc08 lc09 lc10 ... hydro14 \

0 66 0 1 33 0 0 0 0 0 0 ... 4418

1 53 0 2 43 0 0 0 0 0 0 ... 3710

2 28 0 15 45 23 22 12 0 0 0 ... 38819396

3 59 0 2 33 0 0 6 0 0 0 ... 7735

4 52 0 2 43 0 0 1 0 0 0 ... 3999

hydro15 hydro16 hydro17 hydro18 hydro19 dem slope order \

0 68 182937 21313 182937 21313 498 407 2

1 68 155202 18107 155202 18107 365 402 2

2 29 310689856 136347296 310689856 136347296 1470 492 8

3 67 302169 37266 302169 37266 342 467 2

4 68 167904 19575 167904 19575 341 391 2

bcmean

0 -1.435592

1 -1.070739

2 -0.474586

3 -0.747083

4 -0.589795

[5 rows x 48 columns]

dataset (after transform):

lc01 lc02 lc03 lc04 lc05 lc06 lc07 lc08 lc09 \

0 0.880000 0.0 0.010204 0.478261 0.0000 0.00000 0.000000 0.0 0.0

1 0.706667 0.0 0.020408 0.623188 0.0000 0.00000 0.000000 0.0 0.0

2 0.373333 0.0 0.153061 0.652174 0.2875 0.30137 0.127660 0.0 0.0

3 0.786667 0.0 0.020408 0.478261 0.0000 0.00000 0.063830 0.0 0.0

4 0.693333 0.0 0.020408 0.623188 0.0000 0.00000 0.010638 0.0 0.0

lc10 ... hydro14 hydro15 hydro16 hydro17 hydro18 hydro19 \

0 0.0 ... 0.000024 0.759036 0.000140 0.000035 0.000140 0.000035

1 0.0 ... 0.000020 0.759036 0.000118 0.000030 0.000118 0.000030

2 0.0 ... 0.209210 0.289157 0.237482 0.225111 0.237482 0.225111

3 0.0 ... 0.000042 0.746988 0.000231 0.000061 0.000231 0.000061

4 0.0 ... 0.000021 0.759036 0.000128 0.000032 0.000128 0.000032

dem slope order bcmean

0 0.143854 0.374539 0.25 0.028016

1 0.105202 0.369926 0.25 0.130145

2 0.426330 0.452952 1.00 0.297018

3 0.098518 0.429889 0.25 0.220742

4 0.098227 0.359779 0.25 0.264769

[5 rows x 48 columns]

inverse_data:

lc01 lc02 lc03 lc04 lc05 lc06 lc07 lc08 lc09 lc10 ... \

0 66.0 0.0 1.0 33.0 0.0 0.0 0.0 0.0 0.0 0.0 ...

1 53.0 0.0 2.0 43.0 0.0 0.0 0.0 0.0 0.0 0.0 ...

2 28.0 0.0 15.0 45.0 23.0 22.0 12.0 0.0 0.0 0.0 ...

3 59.0 0.0 2.0 33.0 0.0 0.0 6.0 0.0 0.0 0.0 ...

4 52.0 0.0 2.0 43.0 0.0 0.0 1.0 0.0 0.0 0.0 ...

hydro14 hydro15 hydro16 hydro17 hydro18 hydro19 \

0 4418.0 68.0 182937.0 21313.0 182937.0 21313.0

1 3710.0 68.0 155202.0 18107.0 155202.0 18107.0

2 38819396.0 29.0 310689856.0 136347296.0 310689856.0 136347296.0

3 7735.0 67.0 302169.0 37266.0 302169.0 37266.0

4 3999.0 68.0 167904.0 19575.0 167904.0 19575.0

dem slope order bcmean

0 498.0 407.0 2.0 -1.435592

1 365.0 402.0 2.0 -1.070739

2 1470.0 492.0 8.0 -0.474586

3 342.0 467.0 2.0 -0.747083

4 341.0 391.0 2.0 -0.589795

[5 rows x 48 columns]

train_stats: count mean std min 25% 50% 75% max

lc01 1118.0 0.137448 0.193109 0.0 0.013333 0.053333 0.173333 1.0

lc02 1118.0 0.008497 0.057215 0.0 0.000000 0.000000 0.000000 1.0

lc03 1118.0 0.204839 0.247051 0.0 0.020408 0.081633 0.364796 1.0

lc04 1118.0 0.220464 0.175918 0.0 0.101449 0.159420 0.275362 1.0

lc05 1118.0 0.033777 0.107309 0.0 0.000000 0.000000 0.012500 1.0

lc06 1118.0 0.149607 0.162242 0.0 0.041096 0.095890 0.191781 1.0

lc07 1118.0 0.339512 0.271629 0.0 0.106383 0.265957 0.542553 1.0

lc08 1118.0 0.005134 0.057887 0.0 0.000000 0.000000 0.000000 1.0

lc09 1118.0 0.080954 0.188890 0.0 0.000000 0.012048 0.048193 1.0

lc10 1118.0 0.004584 0.054904 0.0 0.000000 0.000000 0.000000 1.0

lc11 1118.0 0.014183 0.067649 0.0 0.000000 0.000000 0.000000 1.0

lc12 1118.0 0.018244 0.069379 0.0 0.000000 0.000000 0.015873 1.0

prec 1118.0 0.010144 0.066673 0.0 0.000039 0.000184 0.001226 1.0

tmin 1118.0 0.497205 0.158301 0.0 0.400538 0.492608 0.586022 1.0

tmax 1118.0 0.528114 0.172596 0.0 0.405479 0.526027 0.642123 1.0

soil01 1118.0 0.090513 0.072919 0.0 0.053125 0.071875 0.112500 1.0

soil02 1118.0 0.492454 0.187220 0.0 0.358974 0.461538 0.615385 1.0

soil03 1118.0 0.517907 0.172268 0.0 0.431373 0.509804 0.588235 1.0

soil04 1118.0 0.493104 0.173381 0.0 0.387097 0.483871 0.580645 1.0

soil05 1118.0 0.383697 0.128425 0.0 0.331081 0.405405 0.459459 1.0

soil06 1118.0 0.211986 0.160954 0.0 0.114286 0.171429 0.257143 1.0

soil07 1118.0 0.289927 0.159142 0.0 0.138889 0.291667 0.416667 1.0

soil08 1118.0 0.701262 0.114678 0.0 0.656499 0.718833 0.773210 1.0

soil09 1118.0 0.978158 0.090370 0.0 1.000000 1.000000 1.000000 1.0

soil10 1118.0 0.210858 0.122884 0.0 0.130435 0.217391 0.260870 1.0

hydro01 1118.0 0.448534 0.193156 0.0 0.320513 0.431624 0.585470 1.0

hydro02 1118.0 0.396587 0.152295 0.0 0.290598 0.358974 0.435897 1.0

hydro03 1118.0 0.400296 0.187627 0.0 0.281250 0.375000 0.531250 1.0

hydro04 1118.0 0.550702 0.166883 0.0 0.467990 0.567450 0.618271 1.0

hydro05 1118.0 0.570012 0.182000 0.0 0.433333 0.560000 0.718333 1.0

hydro06 1118.0 0.447054 0.170048 0.0 0.346204 0.439791 0.562173 1.0

hydro07 1118.0 0.584048 0.161894 0.0 0.509434 0.586478 0.672956 1.0

hydro08 1118.0 0.650470 0.212953 0.0 0.537500 0.712500 0.790625 1.0

hydro09 1118.0 0.471208 0.243332 0.0 0.294964 0.386091 0.740408 1.0

hydro10 1118.0 0.593690 0.191173 0.0 0.469613 0.588398 0.745856 1.0

hydro11 1118.0 0.434005 0.176386 0.0 0.320917 0.418338 0.564470 1.0

hydro12 1118.0 0.011632 0.073962 0.0 0.000046 0.000223 0.001267 1.0

hydro13 1118.0 0.012683 0.077900 0.0 0.000042 0.000218 0.001422 1.0

hydro14 1118.0 0.010287 0.069454 0.0 0.000042 0.000209 0.001256 1.0

hydro15 1118.0 0.258196 0.216782 0.0 0.096386 0.168675 0.385542 1.0

hydro16 1118.0 0.012388 0.077245 0.0 0.000041 0.000211 0.001403 1.0

hydro17 1118.0 0.010475 0.069597 0.0 0.000047 0.000219 0.001358 1.0

hydro18 1118.0 0.012388 0.077245 0.0 0.000041 0.000211 0.001403 1.0

hydro19 1118.0 0.010475 0.069597 0.0 0.000047 0.000219 0.001358 1.0

dem 1118.0 0.155945 0.206628 0.0 0.047733 0.085731 0.140294 1.0

slope 1118.0 0.139799 0.166496 0.0 0.035978 0.071033 0.174354 1.0

order 1118.0 0.338327 0.229210 0.0 0.125000 0.375000 0.500000 1.0

labels.describe: count 1118.000000

mean 0.511352

std 0.229915

min 0.000000

25% 0.341402

50% 0.509617

75% 0.676309

max 1.000000

Name: bcmean, dtype: float64

dataset_size: 1118

samples in validation: 335

/home/user/miniconda3/lib/python3.8/site-packages/seaborn/distributions.py:2551: FutureWarning: `distplot` is a deprecated function and will be removed in a future version. Please adapt your code to use either `displot` (a figure-level function with similar flexibility) or `histplot` (an axes-level function for histograms).

warnings.warn(msg, FutureWarning)

/home/user/miniconda3/lib/python3.8/site-packages/seaborn/distributions.py:1659: FutureWarning: The `bw` parameter is deprecated in favor of `bw_method` and `bw_adjust`. Using 0.15 for `bw_method`, but please see the docs for the new parameters and update your code.

warnings.warn(msg, FutureWarning)

Dim of raw sample image: torch.Size([25, 47])

Dim of labels: torch.Size([25])

max 1.0, min 0.0

max_labels 0.9762871265411377, min 0.10735689848661423
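The split in `DataPreProcessing` produces two index lists, and which samples each loader then sees depends on the sampler. `torch.utils.data.RandomSampler(data_source)` draws positions from `range(len(data_source))`, whereas `SubsetRandomSampler(indices)` draws the listed indices themselves. The sketch below mimics both behaviours in plain Python to show why only the second keeps training and validation samples disjoint. The two helper functions are hypothetical stand-ins, not the torch classes, and the dataset size of 10 is illustrative.

```python
import random

# Hypothetical stand-ins that mimic how two PyTorch samplers choose indices.
def random_sampler_like(data_source):
    # Draws positions 0..len(data_source)-1, ignoring the values stored in data_source
    positions = list(range(len(data_source)))
    random.shuffle(positions)
    return positions

def subset_random_sampler_like(indices):
    # Draws the listed indices themselves, in random order
    shuffled = list(indices)
    random.shuffle(shuffled)
    return shuffled

random.seed(0)
dataset_size = 10
indices = list(range(dataset_size))
random.shuffle(indices)
split = 3
train_indices, val_indices = indices[split:], indices[:split]

# Samples that would be seen by both the training and the validation loader
overlap_positions = set(random_sampler_like(train_indices)) & set(random_sampler_like(val_indices))
overlap_subsets = set(subset_random_sampler_like(train_indices)) & set(subset_random_sampler_like(val_indices))
print(sorted(overlap_positions))
print(sorted(overlap_subsets))
```

With the position-based sampler every validation sample is also a training sample, so validation scores no longer measure generalization.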

[7]:

# Training and Evaluation routines

def train(model,loss_fn, optimizer, train_loader, test_loader, config_str, num_epochs=None, verbose=False):

"""

This is a standard training loop, which leaves some parts to be filled in.

INPUT:

:param model: an untrained pytorch model

:param loss_fn: e.g. Cross Entropy loss of Mean Squared Error.

:param optimizer: the model optimizer, initialized with a learning rate.

:param training_set: The training data, in a dataloader for easy iteration.

:param test_loader: The testing data, in a dataloader for easy iteration.

"""

path_to_save = './plots'

if not os.path.exists(path_to_save):

os.makedirs(path_to_save)

print('optimizer: {}'.format(optimizer))

if num_epochs is None:

num_epochs = 100

print('n. of epochs: {}'.format(num_epochs))

train_loss=[]

val_loss=[]

r2train=[]

r2val=[]

for epoch in range(num_epochs+1):

# loop through each data point in the training set

all_loss_train=0

for data, targets in train_loader:

start = time.time()

# run the model on the data

model_input = data.view(data.size(0),-1).to(device)# TODO: Turn the 28 by 28 image tensors into a 784 dimensional tensor.

if verbose:

print('model_input.shape: {}'.format(model_input.shape))

print('model_input: {}'.format(model_input))

# Clear gradients w.r.t. parameters

optimizer.zero_grad()

out = model(model_input).squeeze()

if verbose:

print('targets: {}'.format(targets.shape))

print('out: {}'.format(out.shape))

# Calculate the loss

targets = targets.to(device) # add an extra dimension to keep CrossEntropy happy.

if verbose: print('targets.shape: {}'.format(targets.shape))

loss = loss_fn(out,targets)

if verbose: print('loss: {}'.format(loss))

# Find the gradients of our loss via backpropogation

loss.backward()

# Adjust accordingly with the optimizer

optimizer.step()

all_loss_train += loss.item()

train_loss.append(all_loss_train/len(train_loader))

with torch.no_grad():

all_loss_val=0

for data, targets in test_loader:

# run the model on the data

model_input = data.view(data.size(0),-1).to(device)# TODO: Turn the 28 by 28 image tensors into a 784 dimensional tensor.

out = model(model_input).squeeze()

targets = targets.to(device) # add an extra dimension to keep CrossEntropy happy.

loss = loss_fn(out,targets)

all_loss_val += loss.item()

val_loss.append(all_loss_val/len(test_loader))

# Give status reports every 100 epochs

if epoch % 10==0:

print(f" EPOCH {epoch}. Progress: {epoch/num_epochs*100}%. ")

r2train.append(evaluate(model,train_loader,verbose))

r2val.append(evaluate(model,test_loader,verbose))

print(" Training R^2: {:.4f}. Test R^2: {:.4f}. Loss Train: {:.4f}. Loss Val: {:.4f}. Time: {:.4f}".format(r2train[-1], r2val[-1],

train_loss[-1], val_loss[-1], 10*(time.time() - start))) #TODO: implement the evaluate function to provide performance statistics during training.

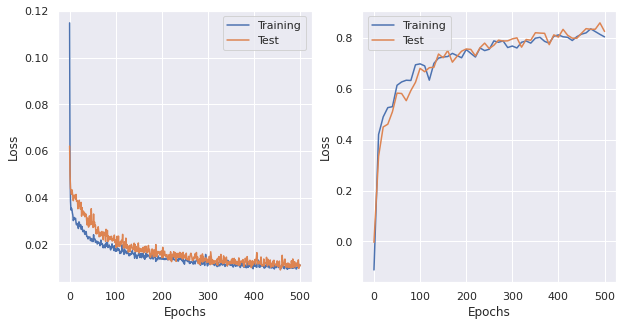

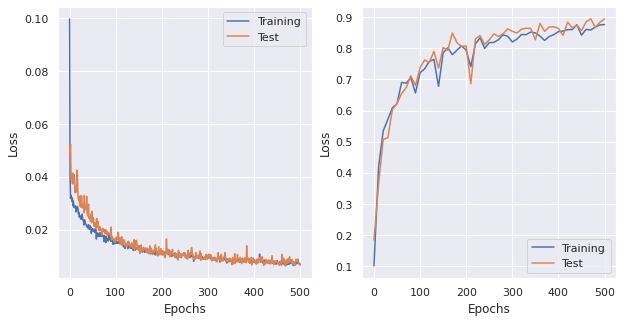

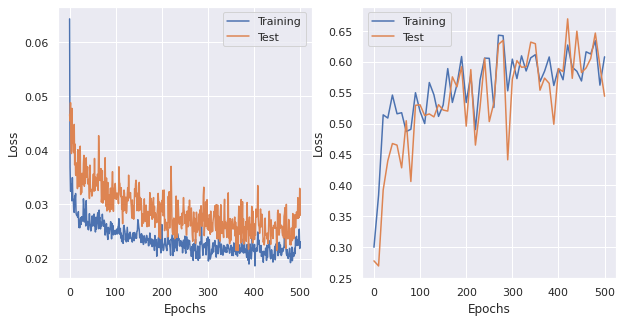

# Plot

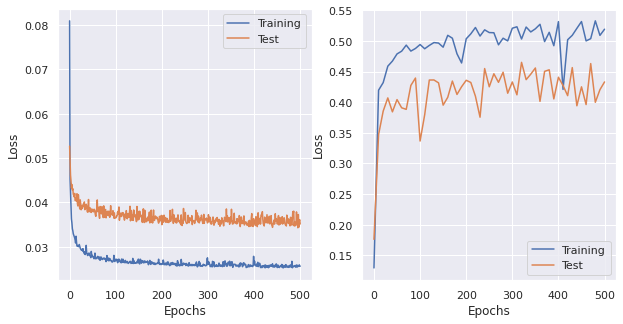

plt.close('all')

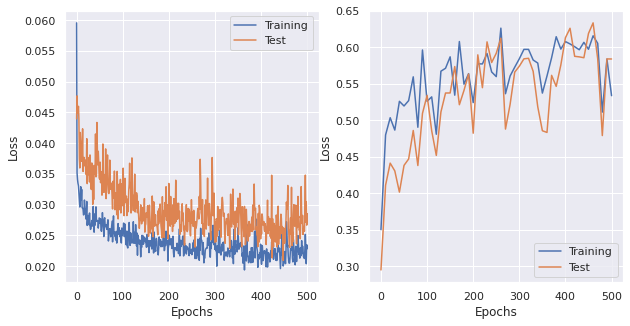

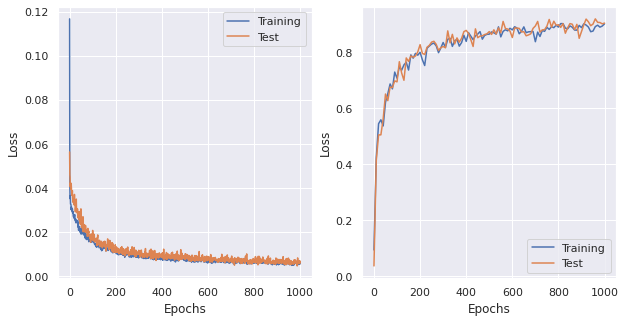

fig,ax = plt.subplots(1,2,figsize=(10,5))

ax[0].plot(np.arange(num_epochs+1),train_loss, label='Training')

ax[0].plot(np.arange(num_epochs+1),val_loss, label='Test')

ax[0].set_xlabel('Epochs')

ax[0].set_ylabel('Loss')

ax[0].legend()

ax[1].plot(np.arange(0,num_epochs+1,10),r2train, label='Training')

ax[1].plot(np.arange(0,num_epochs+1,10),r2val, label='Test')

ax[1].set_xlabel('Epochs')

ax[1].set_ylabel('Loss')

ax[1].legend()

plt.show()

print('saving ', os.path.join(path_to_save, config_str + '.png'))

fig.savefig(os.path.join(path_to_save, config_str + '.png'), bbox_inches='tight')

def evaluate(model, evaluation_set, verbose=False):
    """
    Evaluates the given model on the given dataset.
    Returns the R^2 score averaged over the batches of the evaluation set.
    """
    with torch.no_grad(): # this disables gradient tracking, which makes the model run much more quickly.
        r_score = []
        for data, targets in evaluation_set:
            # run the model on the data
            model_input = data.view(data.size(0),-1).to(device) # flatten each sample into a 1-D feature vector
            if verbose:
                print('model_input.shape: {}'.format(model_input.shape))
                print('targets.shape: {}'.format(targets.shape))
            predicted = model(model_input).squeeze()
            if verbose:
                print('predicted[:5]: {}'.format(predicted[:5].cpu()))
                print('targets[:5]: {}'.format(targets[:5]))
            r_score.append(r2_score(targets, predicted.cpu()))
            if verbose: print('r2_score(targets, out): ',r2_score(targets, predicted.cpu()))
        r_score = np.array(r_score)
        r_score = r_score.mean()
    return r_score
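`evaluate` calls `r2_score` once per batch and returns the mean of those values. As a sketch of what that number means, the example below computes the coefficient of determination, R^2 = 1 - SS_res / SS_tot, in plain Python and compares the score over all samples with the average of per-batch scores. The helper `r2` is hypothetical and the numbers are illustrative.

```python
# Pure-Python sketch of the coefficient of determination, R^2 = 1 - SS_res / SS_tot.
# r2 is a hypothetical helper; the numbers are illustrative.

def r2(y_true, y_pred):
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)          # total sum of squares
    return 1.0 - ss_res / ss_tot

y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 6.0]

# Score computed once over all samples
overall = r2(y_true, y_pred)

# Average of per-batch scores with a batch size of 2
per_batch = [r2(y_true[i:i + 2], y_pred[i:i + 2]) for i in range(0, len(y_true), 2)]
batch_mean = sum(per_batch) / len(per_batch)
print(overall, batch_mean)
```

The two values differ because each batch uses its own mean and variance, so the batch-averaged R^2 printed during training is noisier than a score computed once over the whole set.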

[8]:

# Testing a single linear layer. Despite the class name, no sigmoid is applied, so the model performs linear regression.
class LogisticRegression(nn.Module):

    def __init__(self, in_dim,out_dim,verbose=False):
        super(LogisticRegression, self).__init__()
        # Linear function
        self.fc1 = nn.Linear(in_dim, out_dim)
        torch.nn.init.zeros_(self.fc1.weight)
        torch.nn.init.zeros_(self.fc1.bias)

    def forward(self, x):
        # Linear function
        out = self.fc1(x)
        return out
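The model above is a single linear layer whose weights and bias start at zero. The following plain-Python sketch, with hypothetical helper names and illustrative numbers, shows what that layer computes (out = w . x + b) and that the mean-squared-error gradient is non-zero at the zero starting point, so training can still make progress.

```python
# Sketch of a single linear unit, out = w . x + b, starting from zero weights and bias.
# linear and mse_gradients are hypothetical helpers; the data are illustrative.

def linear(x, w, b):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def mse_gradients(samples, targets, w, b):
    # Gradients of the mean squared error with respect to each weight and the bias
    n = len(samples)
    grad_w = [0.0] * len(w)
    grad_b = 0.0
    for x, y in zip(samples, targets):
        err = linear(x, w, b) - y
        for j, xj in enumerate(x):
            grad_w[j] += 2 * err * xj / n
        grad_b += 2 * err / n
    return grad_w, grad_b

samples = [[0.0, 1.0], [1.0, 0.5]]  # two samples with two features each
targets = [0.5, 1.0]
w, b = [0.0, 0.0], 0.0              # zero initialization

first_output = linear(samples[0], w, b)
grad_w, grad_b = mse_gradients(samples, targets, w, b)
print(first_output)
print(grad_w, grad_b)
```

Zero initialization is harmless for a single linear layer like this one. In networks with hidden layers it would make all hidden units identical, which is why random initialization is used there.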

[10]:

lr_range = [0.01, 0.001,0.0001]

weight_decay_range = [0,0.1,0.01,0.001]

momentum_range = [0,0.1,0.01,0.001]

dampening_range = [0,0.1,0.01,0.001]

nesterov_range = [False]

for lr in lr_range:
    for momentum in momentum_range:
        for weight_decay in weight_decay_range:
            for nesterov in nesterov_range:
                for dampening in dampening_range:
                    print('\nlr: {}, momentum: {}, weight_decay: {}, dampening: {}, nesterov: {} '.format(lr, momentum, weight_decay, dampening, nesterov))
                    model = LogisticRegression(in_dim=47,out_dim=1, verbose=False).to(device)
                    print(model)
                    SGD = torch.optim.SGD(model.parameters(), lr = lr, momentum=momentum, dampening=dampening, weight_decay=weight_decay, nesterov=nesterov)
                    # initialize the loss function (mean squared error, suited to this regression task)
                    loss_fn = nn.MSELoss()
                    config_str = 'lr' + str(lr) + '_momentum' + str(momentum) + '_wdecay' + str(weight_decay) + '_dampening' + str(dampening) +'_nesterov' + str(nesterov)
                    train(model,loss_fn, SGD, dataloaders['train'], dataloaders['val'], config_str,num_epochs=100, verbose=False)
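The nested loops of the grid search can also be written with `itertools.product`, which makes it easy to count the configurations before launching them. This is a sketch of an equivalent enumeration under the same ranges and loop order; it only builds the configuration labels and does not train anything.

```python
from itertools import product

lr_range = [0.01, 0.001, 0.0001]
weight_decay_range = [0, 0.1, 0.01, 0.001]
momentum_range = [0, 0.1, 0.01, 0.001]
dampening_range = [0, 0.1, 0.01, 0.001]
nesterov_range = [False]

# Same nesting order as the loops: lr, momentum, weight_decay, nesterov, dampening
configs = list(product(lr_range, momentum_range, weight_decay_range, nesterov_range, dampening_range))
print(len(configs))

# Build the label of the first few configurations
for lr, momentum, weight_decay, nesterov, dampening in configs[:3]:
    config_str = ('lr' + str(lr) + '_momentum' + str(momentum) + '_wdecay' + str(weight_decay)
                  + '_dampening' + str(dampening) + '_nesterov' + str(nesterov))
    print(config_str)
```

The grid contains 3 x 4 x 4 x 1 x 4 = 192 configurations, each trained for 100 epochs, so reducing the ranges is the quickest way to shorten the search.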

[17]:

# Train the best configuration for longer

lr_range = [0.01]

weight_decay_range = [0.001]

momentum_range = [0.1,0.9]

dampening_range = [0]

nesterov_range = [False]

for lr in lr_range:
    for momentum in momentum_range:
        for weight_decay in weight_decay_range:
            for nesterov in nesterov_range:
                for dampening in dampening_range:
                    try:
                        print('\nlr: {}, momentum: {}, weight_decay: {}, dampening: {}, nesterov: {} '.format(lr, momentum, weight_decay, dampening, nesterov))
                        model = LogisticRegression(in_dim=47,out_dim=1, verbose=False).to(device)
                        print(model)
                        SGD = torch.optim.SGD(model.parameters(), lr = lr, momentum=momentum, dampening=dampening, weight_decay=weight_decay, nesterov=nesterov)
                        # initialize the loss function (mean squared error, suited to this regression task)
                        loss_fn = nn.MSELoss()
                        config_str = 'lr' + str(lr) + 'LONGER_momentum' + str(momentum) + '_wdecay' + str(weight_decay) + '_dampening' + str(dampening) +'_nesterov' + str(nesterov)
                        train(model,loss_fn, SGD, dataloaders['train'], dataloaders['val'], config_str,num_epochs=500, verbose=False)
                    except Exception as exc:
                        # Report the failure instead of silently skipping the configuration
                        print('Configuration failed: {}'.format(exc))
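The two runs in this cell differ only in momentum. As a sketch of what the `momentum`, `dampening` and `weight_decay` arguments do, the example below applies the update rule documented for `torch.optim.SGD` (without Nesterov) to a single scalar parameter. The function `sgd_step`, the toy objective f(p) = p^2 and the number of steps are illustrative assumptions; this is not the library implementation.

```python
# Scalar sketch of SGD with weight decay, momentum and dampening (no Nesterov).
# sgd_step is a hypothetical helper written for illustration only.

def sgd_step(param, grad, buf, lr, momentum=0.0, dampening=0.0, weight_decay=0.0):
    # Weight decay adds a pull towards zero to the gradient
    g = grad + weight_decay * param
    if momentum != 0:
        if buf is None:
            buf = g  # first step: the momentum buffer starts as the gradient
        else:
            buf = momentum * buf + (1 - dampening) * g
        g = buf
    return param - lr * g, buf

def run(momentum, steps=10):
    # Minimize f(p) = p**2, whose gradient is 2*p, starting from p = 1
    p, buf = 1.0, None
    for _ in range(steps):
        p, buf = sgd_step(p, 2 * p, buf, lr=0.01, momentum=momentum, weight_decay=0.001)
    return p

p_low = run(momentum=0.1)
p_high = run(momentum=0.9)
print(p_low, p_high)
```

After the same number of steps the run with momentum 0.9 is closer to the minimum at 0, because the buffer accumulates past gradients and enlarges the effective step. Over more steps it can overshoot the minimum on this toy problem.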

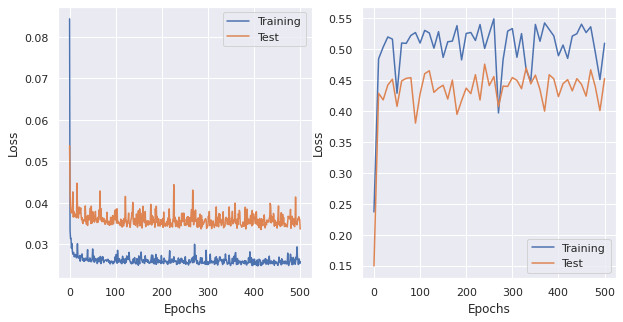

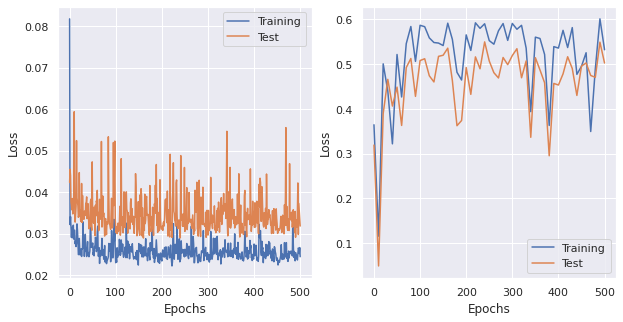

lr: 0.01, momentum: 0.1, weight_decay: 0.001, dampening: 0, nesterov: False

LogisticRegression(

(fc1): Linear(in_features=47, out_features=1, bias=True)

)

optimizer: SGD (

Parameter Group 0

dampening: 0

lr: 0.01

momentum: 0.1

nesterov: False

weight_decay: 0.001

)

n. of epochs: 500

EPOCH 0. Progress: 0.0%.

Training R^2: 0.1292. Test R^2: 0.1760. Loss Train: 0.0809. Loss Val: 0.0527. Time: 5.9460

EPOCH 10. Progress: 2.0%.

Training R^2: 0.4196. Test R^2: 0.3470. Loss Train: 0.0322. Loss Val: 0.0412. Time: 2.5306

EPOCH 20. Progress: 4.0%.

Training R^2: 0.4320. Test R^2: 0.3854. Loss Train: 0.0303. Loss Val: 0.0404. Time: 2.1711

EPOCH 30. Progress: 6.0%.

Training R^2: 0.4587. Test R^2: 0.4069. Loss Train: 0.0289. Loss Val: 0.0396. Time: 3.1840

EPOCH 40. Progress: 8.0%.

Training R^2: 0.4671. Test R^2: 0.3842. Loss Train: 0.0282. Loss Val: 0.0383. Time: 2.2062

EPOCH 50. Progress: 10.0%.

Training R^2: 0.4788. Test R^2: 0.4042. Loss Train: 0.0279. Loss Val: 0.0382. Time: 2.1512

EPOCH 60. Progress: 12.0%.

Training R^2: 0.4832. Test R^2: 0.3908. Loss Train: 0.0277. Loss Val: 0.0405. Time: 2.3680

EPOCH 70. Progress: 14.000000000000002%.

Training R^2: 0.4932. Test R^2: 0.3883. Loss Train: 0.0272. Loss Val: 0.0390. Time: 2.0704

EPOCH 80. Progress: 16.0%.

Training R^2: 0.4834. Test R^2: 0.4274. Loss Train: 0.0272. Loss Val: 0.0373. Time: 2.1498

EPOCH 90. Progress: 18.0%.

Training R^2: 0.4877. Test R^2: 0.4394. Loss Train: 0.0268. Loss Val: 0.0375. Time: 2.1138

EPOCH 100. Progress: 20.0%.

Training R^2: 0.4941. Test R^2: 0.3365. Loss Train: 0.0270. Loss Val: 0.0365. Time: 2.3166

EPOCH 110. Progress: 22.0%.

Training R^2: 0.4872. Test R^2: 0.3795. Loss Train: 0.0267. Loss Val: 0.0376. Time: 2.1446

EPOCH 120. Progress: 24.0%.

Training R^2: 0.4927. Test R^2: 0.4362. Loss Train: 0.0264. Loss Val: 0.0374. Time: 2.8796

EPOCH 130. Progress: 26.0%.

Training R^2: 0.4973. Test R^2: 0.4363. Loss Train: 0.0267. Loss Val: 0.0379. Time: 3.0462

EPOCH 140. Progress: 28.000000000000004%.

Training R^2: 0.4965. Test R^2: 0.4315. Loss Train: 0.0267. Loss Val: 0.0377. Time: 2.1279

EPOCH 150. Progress: 30.0%.

Training R^2: 0.4898. Test R^2: 0.3951. Loss Train: 0.0267. Loss Val: 0.0361. Time: 2.0948

EPOCH 160. Progress: 32.0%.

Training R^2: 0.5092. Test R^2: 0.4080. Loss Train: 0.0267. Loss Val: 0.0386. Time: 2.1599

EPOCH 170. Progress: 34.0%.

Training R^2: 0.5045. Test R^2: 0.4345. Loss Train: 0.0263. Loss Val: 0.0363. Time: 2.7793

EPOCH 180. Progress: 36.0%.

Training R^2: 0.4790. Test R^2: 0.4126. Loss Train: 0.0262. Loss Val: 0.0354. Time: 2.1298

EPOCH 190. Progress: 38.0%.

Training R^2: 0.4640. Test R^2: 0.4252. Loss Train: 0.0262. Loss Val: 0.0364. Time: 2.1042

EPOCH 200. Progress: 40.0%.

Training R^2: 0.5035. Test R^2: 0.4357. Loss Train: 0.0261. Loss Val: 0.0350. Time: 2.1428

EPOCH 210. Progress: 42.0%.

Training R^2: 0.5116. Test R^2: 0.4321. Loss Train: 0.0259. Loss Val: 0.0359. Time: 2.1280

EPOCH 220. Progress: 44.0%.

Training R^2: 0.5220. Test R^2: 0.4098. Loss Train: 0.0263. Loss Val: 0.0364. Time: 2.0515

EPOCH 230. Progress: 46.0%.

Training R^2: 0.5080. Test R^2: 0.3754. Loss Train: 0.0262. Loss Val: 0.0354. Time: 2.1203

EPOCH 240. Progress: 48.0%.

Training R^2: 0.5181. Test R^2: 0.4549. Loss Train: 0.0259. Loss Val: 0.0357. Time: 2.0732

EPOCH 250. Progress: 50.0%.

Training R^2: 0.5137. Test R^2: 0.4251. Loss Train: 0.0260. Loss Val: 0.0371. Time: 2.1298

EPOCH 260. Progress: 52.0%.

Training R^2: 0.5134. Test R^2: 0.4464. Loss Train: 0.0261. Loss Val: 0.0360. Time: 2.3224

EPOCH 270. Progress: 54.0%.

Training R^2: 0.4937. Test R^2: 0.4324. Loss Train: 0.0257. Loss Val: 0.0357. Time: 2.0788

EPOCH 280. Progress: 56.00000000000001%.

Training R^2: 0.5045. Test R^2: 0.4489. Loss Train: 0.0257. Loss Val: 0.0367. Time: 2.2380

EPOCH 290. Progress: 57.99999999999999%.

Training R^2: 0.5001. Test R^2: 0.4146. Loss Train: 0.0260. Loss Val: 0.0359. Time: 2.1239

EPOCH 300. Progress: 60.0%.

Training R^2: 0.5207. Test R^2: 0.4330. Loss Train: 0.0260. Loss Val: 0.0355. Time: 2.6492

EPOCH 310. Progress: 62.0%.

Training R^2: 0.5231. Test R^2: 0.4120. Loss Train: 0.0267. Loss Val: 0.0363. Time: 2.3547

EPOCH 320. Progress: 64.0%.

Training R^2: 0.5032. Test R^2: 0.4650. Loss Train: 0.0257. Loss Val: 0.0366. Time: 2.1155

EPOCH 330. Progress: 66.0%.

Training R^2: 0.5227. Test R^2: 0.4368. Loss Train: 0.0259. Loss Val: 0.0365. Time: 2.0535

EPOCH 340. Progress: 68.0%.

Training R^2: 0.5147. Test R^2: 0.4457. Loss Train: 0.0262. Loss Val: 0.0385. Time: 2.6143

EPOCH 350. Progress: 70.0%.

Training R^2: 0.5198. Test R^2: 0.4558. Loss Train: 0.0260. Loss Val: 0.0356. Time: 3.3361

EPOCH 360. Progress: 72.0%.

Training R^2: 0.5272. Test R^2: 0.4013. Loss Train: 0.0259. Loss Val: 0.0356. Time: 2.0422

EPOCH 370. Progress: 74.0%.

Training R^2: 0.4989. Test R^2: 0.4503. Loss Train: 0.0263. Loss Val: 0.0345. Time: 2.2167

EPOCH 380. Progress: 76.0%.

Training R^2: 0.5141. Test R^2: 0.4529. Loss Train: 0.0261. Loss Val: 0.0352. Time: 2.3632

EPOCH 390. Progress: 78.0%.

Training R^2: 0.4922. Test R^2: 0.4053. Loss Train: 0.0258. Loss Val: 0.0360. Time: 3.0163

EPOCH 400. Progress: 80.0%.

Training R^2: 0.5315. Test R^2: 0.4407. Loss Train: 0.0259. Loss Val: 0.0352. Time: 2.4278

EPOCH 410. Progress: 82.0%.

Training R^2: 0.4209. Test R^2: 0.4272. Loss Train: 0.0257. Loss Val: 0.0351. Time: 2.7372

EPOCH 420. Progress: 84.0%.

Training R^2: 0.5020. Test R^2: 0.4106. Loss Train: 0.0256. Loss Val: 0.0351. Time: 2.3255

EPOCH 430. Progress: 86.0%.

Training R^2: 0.5092. Test R^2: 0.4564. Loss Train: 0.0256. Loss Val: 0.0353. Time: 2.3361

EPOCH 440. Progress: 88.0%.

Training R^2: 0.5206. Test R^2: 0.3941. Loss Train: 0.0262. Loss Val: 0.0344. Time: 2.4134

EPOCH 450. Progress: 90.0%.

Training R^2: 0.5316. Test R^2: 0.4250. Loss Train: 0.0253. Loss Val: 0.0352. Time: 2.3447

EPOCH 460. Progress: 92.0%.

Training R^2: 0.5000. Test R^2: 0.3961. Loss Train: 0.0260. Loss Val: 0.0354. Time: 2.1631

EPOCH 470. Progress: 94.0%.

Training R^2: 0.5037. Test R^2: 0.4630. Loss Train: 0.0254. Loss Val: 0.0347. Time: 2.8979

EPOCH 480. Progress: 96.0%.

Training R^2: 0.5328. Test R^2: 0.3998. Loss Train: 0.0255. Loss Val: 0.0366. Time: 2.0738

EPOCH 490. Progress: 98.0%.

Training R^2: 0.5091. Test R^2: 0.4204. Loss Train: 0.0254. Loss Val: 0.0349. Time: 1.9961

EPOCH 500. Progress: 100.0%.

Training R^2: 0.5191. Test R^2: 0.4330. Loss Train: 0.0256. Loss Val: 0.0351. Time: 3.1330

saving ./plots/lr0.01LONGER_momentum0.1_wdecay0.001_dampening0_nesterovFalse.png

lr: 0.01, momentum: 0.9, weight_decay: 0.001, dampening: 0, nesterov: False

LogisticRegression(

(fc1): Linear(in_features=47, out_features=1, bias=True)

)

optimizer: SGD (

Parameter Group 0

dampening: 0

lr: 0.01

momentum: 0.9

nesterov: False

weight_decay: 0.001

)

n. of epochs: 500

EPOCH 0. Progress: 0.0%.

Training R^2: 0.2370. Test R^2: 0.1497. Loss Train: 0.0843. Loss Val: 0.0538. Time: 3.2603

EPOCH 10. Progress: 2.0%.

Training R^2: 0.4846. Test R^2: 0.4289. Loss Train: 0.0270. Loss Val: 0.0367. Time: 2.6736

EPOCH 20. Progress: 4.0%.

Training R^2: 0.5036. Test R^2: 0.4182. Loss Train: 0.0268. Loss Val: 0.0379. Time: 2.7447

EPOCH 30. Progress: 6.0%.

Training R^2: 0.5200. Test R^2: 0.4422. Loss Train: 0.0266. Loss Val: 0.0359. Time: 2.2237

EPOCH 40. Progress: 8.0%.

Training R^2: 0.5165. Test R^2: 0.4516. Loss Train: 0.0260. Loss Val: 0.0370. Time: 7.8190

EPOCH 50. Progress: 10.0%.

Training R^2: 0.4289. Test R^2: 0.4077. Loss Train: 0.0289. Loss Val: 0.0379. Time: 2.4827

EPOCH 60. Progress: 12.0%.

Training R^2: 0.5101. Test R^2: 0.4489. Loss Train: 0.0254. Loss Val: 0.0353. Time: 2.3484

EPOCH 70. Progress: 14.000000000000002%.

Training R^2: 0.5097. Test R^2: 0.4530. Loss Train: 0.0268. Loss Val: 0.0355. Time: 3.0138

EPOCH 80. Progress: 16.0%.

Training R^2: 0.5224. Test R^2: 0.4542. Loss Train: 0.0260. Loss Val: 0.0354. Time: 2.6839

EPOCH 90. Progress: 18.0%.

Training R^2: 0.5271. Test R^2: 0.3807. Loss Train: 0.0260. Loss Val: 0.0349. Time: 1.8238

EPOCH 100. Progress: 20.0%.

Training R^2: 0.5101. Test R^2: 0.4279. Loss Train: 0.0261. Loss Val: 0.0359. Time: 2.3156

EPOCH 110. Progress: 22.0%.

Training R^2: 0.5306. Test R^2: 0.4605. Loss Train: 0.0258. Loss Val: 0.0345. Time: 1.8360

EPOCH 120. Progress: 24.0%.

Training R^2: 0.5264. Test R^2: 0.4653. Loss Train: 0.0254. Loss Val: 0.0367. Time: 2.4769

EPOCH 130. Progress: 26.0%.

Training R^2: 0.5018. Test R^2: 0.4304. Loss Train: 0.0263. Loss Val: 0.0352. Time: 2.2373

EPOCH 140. Progress: 28.000000000000004%.

Training R^2: 0.5286. Test R^2: 0.4372. Loss Train: 0.0258. Loss Val: 0.0349. Time: 2.5191

EPOCH 150. Progress: 30.0%.

Training R^2: 0.4869. Test R^2: 0.4419. Loss Train: 0.0272. Loss Val: 0.0362. Time: 2.7092

EPOCH 160. Progress: 32.0%.

Training R^2: 0.5121. Test R^2: 0.4195. Loss Train: 0.0263. Loss Val: 0.0374. Time: 2.3216

EPOCH 170. Progress: 34.0%.

Training R^2: 0.5133. Test R^2: 0.4503. Loss Train: 0.0263. Loss Val: 0.0357. Time: 2.1948

EPOCH 180. Progress: 36.0%.

Training R^2: 0.5382. Test R^2: 0.3948. Loss Train: 0.0274. Loss Val: 0.0352. Time: 2.3185

EPOCH 190. Progress: 38.0%.

Training R^2: 0.4829. Test R^2: 0.4170. Loss Train: 0.0268. Loss Val: 0.0367. Time: 2.4032

EPOCH 200. Progress: 40.0%.

Training R^2: 0.5256. Test R^2: 0.4373. Loss Train: 0.0262. Loss Val: 0.0350. Time: 2.3591

EPOCH 210. Progress: 42.0%.

Training R^2: 0.5272. Test R^2: 0.4283. Loss Train: 0.0256. Loss Val: 0.0354. Time: 2.1982

EPOCH 220. Progress: 44.0%.

Training R^2: 0.5143. Test R^2: 0.4589. Loss Train: 0.0259. Loss Val: 0.0345. Time: 3.1806

EPOCH 230. Progress: 46.0%.

Training R^2: 0.5401. Test R^2: 0.4181. Loss Train: 0.0261. Loss Val: 0.0343. Time: 2.5634

EPOCH 240. Progress: 48.0%.

Training R^2: 0.5014. Test R^2: 0.4760. Loss Train: 0.0255. Loss Val: 0.0352. Time: 2.0837

EPOCH 250. Progress: 50.0%.

Training R^2: 0.5255. Test R^2: 0.4414. Loss Train: 0.0268. Loss Val: 0.0347. Time: 1.9711

EPOCH 260. Progress: 52.0%.

Training R^2: 0.5492. Test R^2: 0.4560. Loss Train: 0.0256. Loss Val: 0.0350. Time: 2.4712

EPOCH 270. Progress: 54.0%.

Training R^2: 0.3971. Test R^2: 0.4073. Loss Train: 0.0258. Loss Val: 0.0380. Time: 1.8694

EPOCH 280. Progress: 56.00000000000001%.

Training R^2: 0.4833. Test R^2: 0.4405. Loss Train: 0.0252. Loss Val: 0.0342. Time: 1.9649

EPOCH 290. Progress: 57.99999999999999%.

Training R^2: 0.5295. Test R^2: 0.4399. Loss Train: 0.0255. Loss Val: 0.0349. Time: 1.8793

EPOCH 300. Progress: 60.0%.

Training R^2: 0.5335. Test R^2: 0.4543. Loss Train: 0.0255. Loss Val: 0.0347. Time: 2.0025

EPOCH 310. Progress: 62.0%.

Training R^2: 0.4871. Test R^2: 0.4498. Loss Train: 0.0256. Loss Val: 0.0348. Time: 1.9823

EPOCH 320. Progress: 64.0%.

Training R^2: 0.5254. Test R^2: 0.4363. Loss Train: 0.0265. Loss Val: 0.0346. Time: 2.0350

EPOCH 330. Progress: 66.0%.

Training R^2: 0.4671. Test R^2: 0.4699. Loss Train: 0.0263. Loss Val: 0.0348. Time: 2.0128

EPOCH 340. Progress: 68.0%.

Training R^2: 0.4482. Test R^2: 0.4442. Loss Train: 0.0263. Loss Val: 0.0365. Time: 1.9249

EPOCH 350. Progress: 70.0%.

Training R^2: 0.5402. Test R^2: 0.4583. Loss Train: 0.0253. Loss Val: 0.0346. Time: 2.1697

EPOCH 360. Progress: 72.0%.

Training R^2: 0.5130. Test R^2: 0.4348. Loss Train: 0.0281. Loss Val: 0.0350. Time: 1.9188

EPOCH 370. Progress: 74.0%.

Training R^2: 0.5427. Test R^2: 0.4000. Loss Train: 0.0257. Loss Val: 0.0349. Time: 1.9767

EPOCH 380. Progress: 76.0%.

Training R^2: 0.5321. Test R^2: 0.4589. Loss Train: 0.0261. Loss Val: 0.0366. Time: 1.8689

EPOCH 390. Progress: 78.0%.

Training R^2: 0.5216. Test R^2: 0.4523. Loss Train: 0.0263. Loss Val: 0.0352. Time: 1.7124

EPOCH 400. Progress: 80.0%.

Training R^2: 0.4896. Test R^2: 0.4235. Loss Train: 0.0262. Loss Val: 0.0367. Time: 1.9804

EPOCH 410. Progress: 82.0%.

Training R^2: 0.5069. Test R^2: 0.4444. Loss Train: 0.0251. Loss Val: 0.0351. Time: 2.0140

EPOCH 420. Progress: 84.0%.

Training R^2: 0.4853. Test R^2: 0.4509. Loss Train: 0.0262. Loss Val: 0.0361. Time: 1.9275

EPOCH 430. Progress: 86.0%.

Training R^2: 0.5214. Test R^2: 0.4328. Loss Train: 0.0255. Loss Val: 0.0358. Time: 1.9275

EPOCH 440. Progress: 88.0%.

Training R^2: 0.5252. Test R^2: 0.4525. Loss Train: 0.0254. Loss Val: 0.0357. Time: 2.0793

EPOCH 450. Progress: 90.0%.

Training R^2: 0.5405. Test R^2: 0.4435. Loss Train: 0.0254. Loss Val: 0.0339. Time: 1.9715

EPOCH 460. Progress: 92.0%.

Training R^2: 0.5270. Test R^2: 0.4243. Loss Train: 0.0266. Loss Val: 0.0346. Time: 1.9307

EPOCH 470. Progress: 94.0%.

Training R^2: 0.5364. Test R^2: 0.4669. Loss Train: 0.0254. Loss Val: 0.0343. Time: 2.1174

EPOCH 480. Progress: 96.0%.

Training R^2: 0.4949. Test R^2: 0.4394. Loss Train: 0.0261. Loss Val: 0.0339. Time: 1.9938

EPOCH 490. Progress: 98.0%.

Training R^2: 0.4509. Test R^2: 0.4009. Loss Train: 0.0257. Loss Val: 0.0414. Time: 1.9509

EPOCH 500. Progress: 100.0%.

Training R^2: 0.5098. Test R^2: 0.4527. Loss Train: 0.0258. Loss Val: 0.0337. Time: 2.0501

saving ./plots/lr0.01LONGER_momentum0.9_wdecay0.001_dampening0_nesterovFalse.png

[18]:

# Predict on the first batch of the 'all_val' split

with torch.no_grad():

    data, targets_val = next(iter(dataloaders['all_val']))

    model_input = data.to(device)

    predicted_val = model(model_input).squeeze()

print('predicted.shape: {}'.format(predicted_val.shape))

print('predicted[:20]: \t{}'.format(predicted_val[:20]))

print('targets[:20]: \t\t{}'.format(targets_val[:20]))

# Predict on the first batch of the 'test' split

with torch.no_grad():

    data, targets = next(iter(dataloaders['test']))

    model_input = data.to(device)

    predicted = model(model_input).squeeze()

print('predicted.shape: {}'.format(predicted.shape))

print('predicted[:20]: \t{}'.format(predicted[:20]))

print('targets[:20]: \t\t{}'.format(targets[:20]))

predicted.shape: torch.Size([335])

predicted[:20]: tensor([0.4197, 0.4249, 0.6119, 0.8562, 0.6343, 0.3911, 0.4445, 0.2016, 0.4953,
        0.5134, 0.2582, 0.4030, 0.3671, 0.4673, 0.5299, 0.7208, 0.5424, 0.1291,
        0.6937, 0.1303])

targets[:20]: tensor([0.3880, 0.2883, 0.7370, 0.9044, 0.5947, 0.5528, 0.3247, 0.1974, 0.5815,
        0.8917, 0.3609, 0.3433, 0.3853, 0.5961, 0.9056, 0.7102, 0.6711, 0.0362,
        0.5681, 0.2078])

predicted.shape: torch.Size([1118])

predicted[:20]: tensor([0.3200, 0.3313, 0.6222, 0.3567, 0.3359, 0.4297, 0.3794, 0.4444, 0.5455,
        0.5131, 0.5163, 0.5323, 0.5762, 0.5698, 0.5697, 0.5701, 0.5701, 0.5715,
        0.5823, 0.5200])

targets[:20]: tensor([0.0280, 0.1301, 0.2970, 0.2207, 0.2648, 0.3342, 0.2873, 0.3362, 0.6023,
        0.5031, 0.5335, 0.6247, 0.5454, 0.4926, 0.4442, 0.5030, 0.5472, 0.5128,
        0.5702, 0.5017])

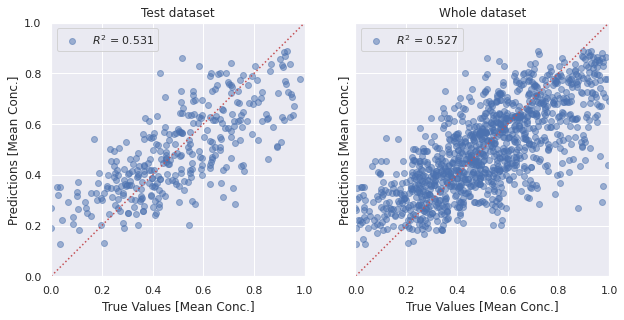

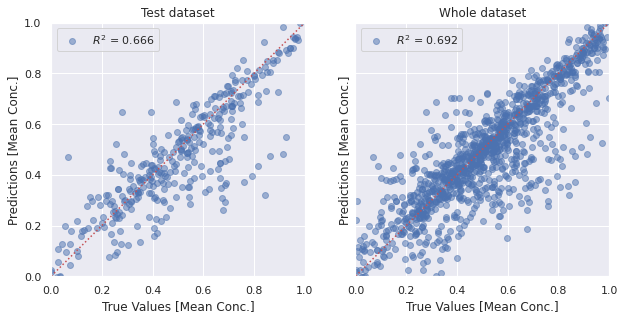

[20]:

# Time for a real test

path_to_save = './plots'

if not os.path.exists(path_to_save):

    os.makedirs(path_to_save)

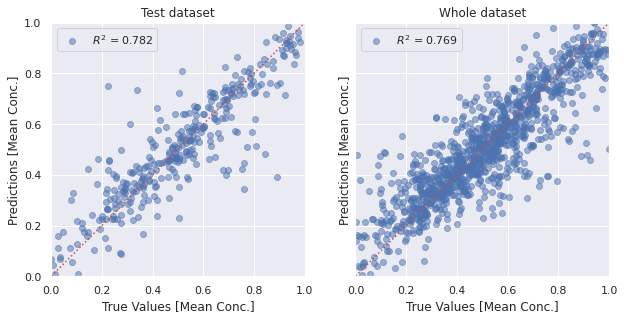

fig, (ax1,ax2) = plt.subplots(1,2, sharey=True)

# Left panel: 'all_val' predictions against their targets

r = r2_score(targets_val, predicted_val.cpu())

ax1.scatter(targets_val, predicted_val.cpu(),alpha=0.5, label='$R^2$ = %.3f' % (r))

ax1.legend(loc="upper left")

ax1.set_xlabel('True Values [Mean Conc.]')

ax1.set_ylabel('Predictions [Mean Conc.]')

ax1.axis('equal')

ax1.axis('square')

ax1.set_xlim([0,1])

ax1.set_ylim([0,1])

_ = ax1.plot([-100, 100], [-100, 100], 'r:')

ax1.set_title('Test dataset')

fig.set_figheight(30)

fig.set_figwidth(10)

# Right panel: 'test' predictions against their targets

r = r2_score(targets, predicted.cpu())

ax2.scatter(targets, predicted.cpu(), alpha=0.5, label='$R^2$ = %.3f' % (r))

ax2.legend(loc="upper left")

ax2.set_xlabel('True Values [Mean Conc.]')

ax2.set_ylabel('Predictions [Mean Conc.]')

ax2.axis('equal')

ax2.axis('square')

ax2.set_xlim([0,1])

ax2.set_ylim([0,1])

_ = ax2.plot([-100, 100], [-100, 100], 'r:')

ax2.set_title('Whole dataset')

fig.savefig(os.path.join(path_to_save, 'LogisticReg_LONGER_R2Score_' + config_str + '.png'), bbox_inches='tight')

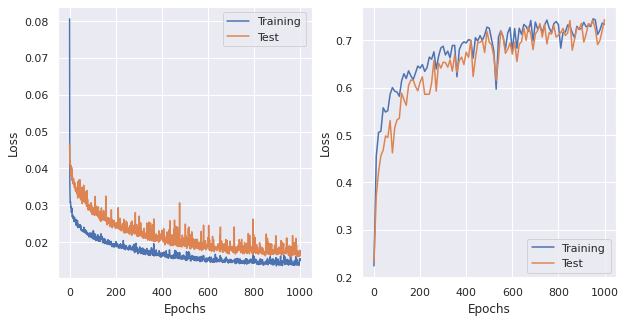
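The $R^2$ value in the plot legends is, assuming `r2_score` here is scikit-learn's, one minus the ratio of the residual sum of squares to the total sum of squares. The pure-Python sketch below computes it by hand on toy values. The function name `r2_manual` and the toy numbers are illustrative and not part of the notebook. It also shows why $R^2$ can be negative, as in some early-epoch log lines: this happens whenever the predictions are worse than always predicting the mean of the targets.

```python
def r2_manual(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    # Residual sum of squares: error of the model's predictions.
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: error of always predicting the mean.
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


targets_toy = [1.0, 2.0, 3.0]  # toy values, not taken from the dataset

perfect = r2_manual(targets_toy, [1.0, 2.0, 3.0])    # exact predictions
mean_only = r2_manual(targets_toy, [2.0, 2.0, 2.0])  # always predict the mean
reversed_ = r2_manual(targets_toy, [3.0, 2.0, 1.0])  # worse than the mean

print(perfect, mean_only, reversed_)  # 1.0 0.0 -3.0
```

A score of 0 therefore marks the baseline of a constant mean predictor, which gives a reference point for the values around 0.45 reported in the logs.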

Question 1

What accuracy did your simple network achieve? Because this is a regression task, report the test R^2 rather than a classification percentage. Make a table of the configurations you tested and the results you obtained!

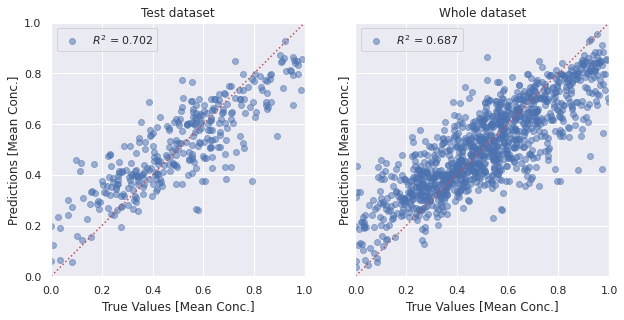

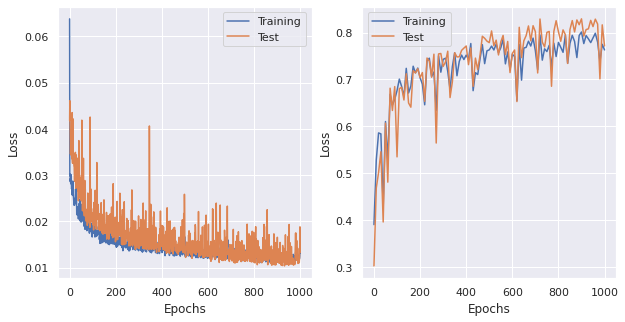

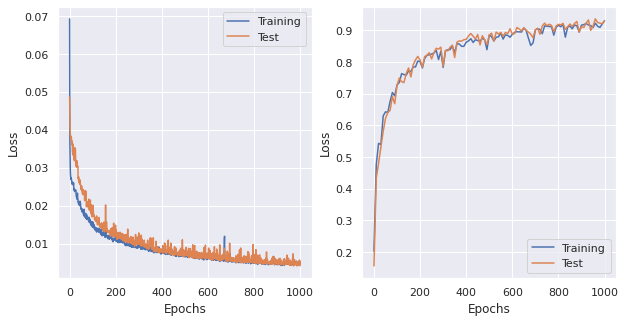
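One possible way to build the table asked for in Question 1 is to parse the metric lines that the training loop prints. The sketch below uses only the standard library. The helper names `parse_metrics` and `format_table` are hypothetical. The two input strings are the EPOCH 500 lines of the two logistic-regression runs logged above, with the first run's settings read from its saved plot name.

```python
import re

# Pattern for the metric lines printed during training in this notebook.
METRIC_RE = re.compile(
    r"Training R\^2: (?P<train_r2>-?\d+\.\d+)\. "
    r"Test R\^2: (?P<test_r2>-?\d+\.\d+)\. "
    r"Loss Train: (?P<loss_train>\d+\.\d+)\. "
    r"Loss Val: (?P<loss_val>\d+\.\d+)\."
)


def parse_metrics(line):
    """Return the four metrics of a log line as floats, or None if absent."""
    match = METRIC_RE.search(line)
    if match is None:
        return None
    return {name: float(value) for name, value in match.groupdict().items()}


# Final-epoch lines copied from the logs (configuration -> log line).
final_lines = {
    "lr=0.01, momentum=0.1": "Training R^2: 0.5191. Test R^2: 0.4330. "
                             "Loss Train: 0.0256. Loss Val: 0.0351. Time: 3.1330",
    "lr=0.01, momentum=0.9": "Training R^2: 0.5098. Test R^2: 0.4527. "
                             "Loss Train: 0.0258. Loss Val: 0.0337. Time: 2.0501",
}


def format_table(runs):
    """Render one aligned text row per configuration."""
    rows = [f"{'configuration':<24}{'train R^2':>10}{'test R^2':>10}{'val loss':>10}"]
    for config, line in runs.items():
        m = parse_metrics(line)
        rows.append(
            f"{config:<24}{m['train_r2']:>10.4f}{m['test_r2']:>10.4f}{m['loss_val']:>10.4f}"
        )
    return "\n".join(rows)


print(format_table(final_lines))
```

Because the per-epoch scores fluctuate, you may prefer to record the best or the average of the last few logged epochs instead of only the final one.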

Feed-forward Neural Network

This time, keeping the rest of your logistic model fixed:

Create one hidden layer (with 128 units) between the input and output by creating another weight and bias variable.

Try training this without a non-linearity between the layers (linear activation), and then try adding a sigmoid non-linearity both before the hidden layer and after the hidden layer, recording your test accuracy results for each in a table.

Try adjusting the learning rate (by making it smaller) if your model is not converging or its accuracy is not improving. You might also try increasing the number of epochs used.

Experiment with the non-linearity used before the middle layer. Here are some activation functions to choose from: relu, softplus, elu, tanh.

Lastly, experiment with the width of the hidden layer, keeping the activation function that performs best. Remember to add these results to your table.
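One way to organise the activation experiments listed above is to make the non-linearity a constructor argument. This is a minimal sketch, not the notebook's own model: the class name `ConfigurableFFNet` and the `ACTIVATIONS` lookup are illustrative assumptions, and the sizes (47 inputs, 128 hidden units, 1 output) follow the values used elsewhere in this exercise.

```python
import torch
import torch.nn as nn

# Map the names from the exercise text to PyTorch activation modules.
# "linear" means no non-linearity at all (identity).
ACTIVATIONS = {
    "linear": nn.Identity,
    "sigmoid": nn.Sigmoid,
    "relu": nn.ReLU,
    "softplus": nn.Softplus,
    "elu": nn.ELU,
    "tanh": nn.Tanh,
}


class ConfigurableFFNet(nn.Module):
    """One hidden layer with a selectable activation (illustrative sketch)."""

    def __init__(self, in_dim, hid_dim, out_dim, activation="relu"):
        super().__init__()
        self.fc1 = nn.Linear(in_dim, hid_dim)
        self.act = ACTIVATIONS[activation]()
        self.fc2 = nn.Linear(hid_dim, out_dim)

    def forward(self, x):
        # Linear -> activation -> linear readout
        return self.fc2(self.act(self.fc1(x)))


# Smoke test on random inputs: every variant maps (batch, 47) to (batch, 1).
x = torch.randn(8, 47)
output_shapes = {
    name: tuple(ConfigurableFFNet(47, 128, 1, activation=name)(x).shape)
    for name in ACTIVATIONS
}
print(output_shapes)
```

Each variant can then be passed to the same training loop, so that only the `activation` argument changes between rows of your results table.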

[21]:

class FeedForwardNet(nn.Module):

    def __init__(self, in_dim, hid_dim, out_dim, verbose=False):

        super(FeedForwardNet, self).__init__()

        # Linear function (input to hidden)

        self.fc1 = nn.Linear(in_dim, hid_dim)

        # Linear function (hidden to output)

        self.fc2 = nn.Linear(hid_dim, out_dim)

    def forward(self, x):

        # Two linear functions with no non-linearity in between

        out = self.fc1(x)

        out = self.fc2(out)

        return out
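A model like `FeedForwardNet`, with two linear layers and no non-linearity between them, can only represent a single affine map, however wide the hidden layer is. The sketch below checks this numerically on randomly initialised layers. The variable names and the random input are illustrative; only `nn.Linear` and the layer sizes come from the notebook.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

in_dim, hid_dim, out_dim = 47, 128, 1
fc1 = nn.Linear(in_dim, hid_dim)
fc2 = nn.Linear(hid_dim, out_dim)

with torch.no_grad():
    # nn.Linear computes x @ weight.T + bias, so
    # fc2(fc1(x)) = x @ (W2 @ W1).T + (W2 @ b1 + b2).
    W = fc2.weight @ fc1.weight           # shape: (out_dim, in_dim)
    b = fc2.weight @ fc1.bias + fc2.bias  # shape: (out_dim,)

    x = torch.randn(16, in_dim)
    stacked = fc2(fc1(x))      # output of the two-layer linear network
    collapsed = x @ W.T + b    # output of the equivalent single layer

max_diff = (stacked - collapsed).abs().max().item()
print("largest absolute difference:", max_diff)
```

The difference should be at the level of floating-point rounding. This is consistent with the linear feed-forward runs in this exercise ending at test R^2 values close to those of the single-layer model, and it is the reason the exercise asks you to add a non-linearity next.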

[22]:

# Best configuration for longer

lr_range = [0.001]

hid_dim_range = [64, 128]

weight_decay_range = [0.001]

momentum_range = [0.9]

dampening_range = [0]

nesterov_range = [False]

for lr in lr_range:

    for momentum in momentum_range:

        for weight_decay in weight_decay_range:

            for nesterov in nesterov_range:

                for dampening in dampening_range:

                    for hid_dim in hid_dim_range:

                        try:

                            print('\nlr: {}, momentum: {}, weight_decay: {}, dampening: {}, nesterov: {} '.format(lr, momentum, weight_decay, dampening, nesterov))

                            model = FeedForwardNet(in_dim=47, hid_dim=hid_dim, out_dim=1, verbose=False).to(device)

                            print(model)

                            SGD = torch.optim.SGD(model.parameters(), lr=lr, momentum=momentum, dampening=dampening, weight_decay=weight_decay, nesterov=nesterov)

                            # Mean squared error loss for the regression target

                            loss_fn = nn.MSELoss()

                            config_str = 'lr' + str(lr) + 'FFNet_momentum' + str(momentum) + '_wdecay' + str(weight_decay) + '_dampening' + str(dampening) + '_nesterov' + str(nesterov) + '_HidDim' + str(hid_dim)

                            train(model, loss_fn, SGD, dataloaders['train'], dataloaders['val'], config_str, num_epochs=500, verbose=False)

                        except Exception as err:

                            # Report the failed configuration instead of silently skipping it

                            print('Configuration failed: {}'.format(err))
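The six nested loops above visit every combination of the hyperparameter lists. As an alternative sketch, `itertools.product` from the standard library produces the same combinations with one loop. The helper names `iter_configs` and `config_name` are hypothetical; the naming scheme mirrors the `config_str` built in the cell above.

```python
from itertools import product

# Same search space as the cell above.
grid = {
    "lr": [0.001],
    "hid_dim": [64, 128],
    "weight_decay": [0.001],
    "momentum": [0.9],
    "dampening": [0],
    "nesterov": [False],
}


def iter_configs(grid):
    """Yield one dict per combination of the listed hyperparameter values."""
    keys = list(grid)
    for values in product(*(grid[key] for key in keys)):
        yield dict(zip(keys, values))


def config_name(cfg, tag="FFNet"):
    """Build the string used to name the saved plot for a configuration."""
    return (
        f"lr{cfg['lr']}{tag}_momentum{cfg['momentum']}_wdecay{cfg['weight_decay']}"
        f"_dampening{cfg['dampening']}_nesterov{cfg['nesterov']}_HidDim{cfg['hid_dim']}"
    )


configs = list(iter_configs(grid))
for cfg in configs:
    # In the notebook, model creation and the call to train() would go here.
    print(config_name(cfg))
```

Adding a new hyperparameter then means adding one entry to `grid` rather than another level of indentation.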

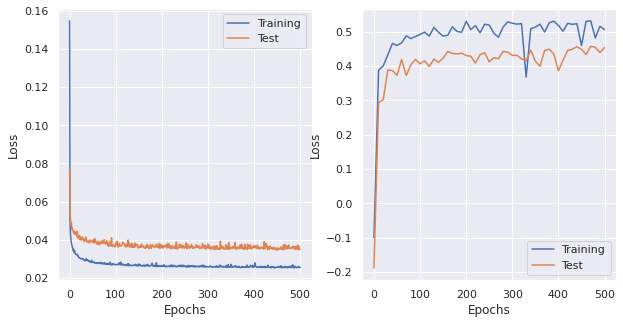

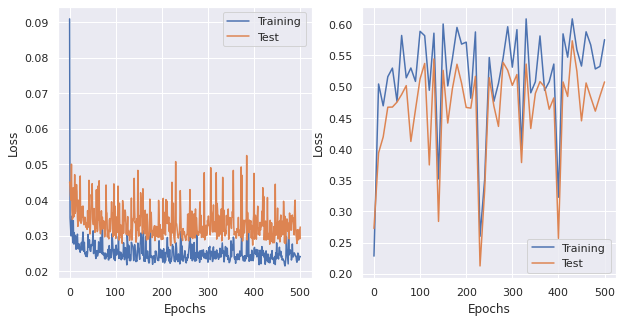

lr: 0.001, momentum: 0.9, weight_decay: 0.001, dampening: 0, nesterov: False

FeedForwardNet(

(fc1): Linear(in_features=47, out_features=64, bias=True)

(fc2): Linear(in_features=64, out_features=1, bias=True)

)

optimizer: SGD (

Parameter Group 0

dampening: 0

lr: 0.001

momentum: 0.9

nesterov: False

weight_decay: 0.001

)

n. of epochs: 500

EPOCH 0. Progress: 0.0%.

Training R^2: -0.1002. Test R^2: -0.1883. Loss Train: 0.1547. Loss Val: 0.0770. Time: 2.8679

EPOCH 10. Progress: 2.0%.

Training R^2: 0.3881. Test R^2: 0.2935. Loss Train: 0.0333. Loss Val: 0.0443. Time: 2.2752

EPOCH 20. Progress: 4.0%.

Training R^2: 0.4002. Test R^2: 0.3021. Loss Train: 0.0306. Loss Val: 0.0407. Time: 7.0543

EPOCH 30. Progress: 6.0%.

Training R^2: 0.4327. Test R^2: 0.3883. Loss Train: 0.0296. Loss Val: 0.0408. Time: 2.1320

EPOCH 40. Progress: 8.0%.

Training R^2: 0.4659. Test R^2: 0.3870. Loss Train: 0.0287. Loss Val: 0.0389. Time: 2.2508

EPOCH 50. Progress: 10.0%.

Training R^2: 0.4600. Test R^2: 0.3723. Loss Train: 0.0281. Loss Val: 0.0391. Time: 2.6312

EPOCH 60. Progress: 12.0%.

Training R^2: 0.4675. Test R^2: 0.4191. Loss Train: 0.0280. Loss Val: 0.0381. Time: 2.1675

EPOCH 70. Progress: 14.000000000000002%.

Training R^2: 0.4880. Test R^2: 0.3722. Loss Train: 0.0277. Loss Val: 0.0394. Time: 3.2555

EPOCH 80. Progress: 16.0%.

Training R^2: 0.4800. Test R^2: 0.4036. Loss Train: 0.0273. Loss Val: 0.0388. Time: 3.5889

EPOCH 90. Progress: 18.0%.

Training R^2: 0.4860. Test R^2: 0.4194. Loss Train: 0.0267. Loss Val: 0.0368. Time: 2.2345

EPOCH 100. Progress: 20.0%.

Training R^2: 0.4921. Test R^2: 0.4067. Loss Train: 0.0269. Loss Val: 0.0372. Time: 2.6351

EPOCH 110. Progress: 22.0%.

Training R^2: 0.4988. Test R^2: 0.4150. Loss Train: 0.0276. Loss Val: 0.0368. Time: 2.4887

EPOCH 120. Progress: 24.0%.

Training R^2: 0.4870. Test R^2: 0.3986. Loss Train: 0.0266. Loss Val: 0.0366. Time: 2.3648

EPOCH 130. Progress: 26.0%.

Training R^2: 0.5127. Test R^2: 0.4201. Loss Train: 0.0265. Loss Val: 0.0361. Time: 2.3501

EPOCH 140. Progress: 28.000000000000004%.

Training R^2: 0.4983. Test R^2: 0.4107. Loss Train: 0.0265. Loss Val: 0.0359. Time: 2.6944

EPOCH 150. Progress: 30.0%.

Training R^2: 0.4872. Test R^2: 0.4233. Loss Train: 0.0272. Loss Val: 0.0364. Time: 2.9972

EPOCH 160. Progress: 32.0%.

Training R^2: 0.4895. Test R^2: 0.4423. Loss Train: 0.0263. Loss Val: 0.0364. Time: 3.7616

EPOCH 170. Progress: 34.0%.

Training R^2: 0.5143. Test R^2: 0.4369. Loss Train: 0.0262. Loss Val: 0.0362. Time: 2.2601

EPOCH 180. Progress: 36.0%.

Training R^2: 0.5009. Test R^2: 0.4351. Loss Train: 0.0269. Loss Val: 0.0372. Time: 2.2206

EPOCH 190. Progress: 38.0%.

Training R^2: 0.4984. Test R^2: 0.4373. Loss Train: 0.0259. Loss Val: 0.0361. Time: 2.2813

EPOCH 200. Progress: 40.0%.

Training R^2: 0.5306. Test R^2: 0.4306. Loss Train: 0.0265. Loss Val: 0.0359. Time: 2.2075

EPOCH 210. Progress: 42.0%.

Training R^2: 0.5060. Test R^2: 0.4286. Loss Train: 0.0260. Loss Val: 0.0362. Time: 2.2509

EPOCH 220. Progress: 44.0%.

Training R^2: 0.5179. Test R^2: 0.4087. Loss Train: 0.0267. Loss Val: 0.0351. Time: 2.0607

EPOCH 230. Progress: 46.0%.

Training R^2: 0.4969. Test R^2: 0.4329. Loss Train: 0.0260. Loss Val: 0.0359. Time: 3.0709

EPOCH 240. Progress: 48.0%.

Training R^2: 0.5218. Test R^2: 0.4391. Loss Train: 0.0259. Loss Val: 0.0359. Time: 2.5126

EPOCH 250. Progress: 50.0%.

Training R^2: 0.5194. Test R^2: 0.4123. Loss Train: 0.0260. Loss Val: 0.0363. Time: 3.0602

EPOCH 260. Progress: 52.0%.

Training R^2: 0.4955. Test R^2: 0.4243. Loss Train: 0.0262. Loss Val: 0.0354. Time: 2.8255

EPOCH 270. Progress: 54.0%.

Training R^2: 0.4839. Test R^2: 0.4214. Loss Train: 0.0262. Loss Val: 0.0357. Time: 2.8350

EPOCH 280. Progress: 56.00000000000001%.

Training R^2: 0.5136. Test R^2: 0.4427. Loss Train: 0.0262. Loss Val: 0.0361. Time: 2.8567

EPOCH 290. Progress: 57.99999999999999%.

Training R^2: 0.5287. Test R^2: 0.4405. Loss Train: 0.0266. Loss Val: 0.0369. Time: 2.5230

EPOCH 300. Progress: 60.0%.

Training R^2: 0.5248. Test R^2: 0.4311. Loss Train: 0.0257. Loss Val: 0.0376. Time: 4.1712

EPOCH 310. Progress: 62.0%.

Training R^2: 0.5220. Test R^2: 0.4311. Loss Train: 0.0256. Loss Val: 0.0356. Time: 2.7101

EPOCH 320. Progress: 64.0%.

Training R^2: 0.5235. Test R^2: 0.4212. Loss Train: 0.0260. Loss Val: 0.0357. Time: 2.4286

EPOCH 330. Progress: 66.0%.

Training R^2: 0.3678. Test R^2: 0.4150. Loss Train: 0.0262. Loss Val: 0.0366. Time: 2.4606

EPOCH 340. Progress: 68.0%.

Training R^2: 0.5088. Test R^2: 0.4462. Loss Train: 0.0266. Loss Val: 0.0349. Time: 2.1945

EPOCH 350. Progress: 70.0%.

Training R^2: 0.5135. Test R^2: 0.4145. Loss Train: 0.0271. Loss Val: 0.0354. Time: 2.5988

EPOCH 360. Progress: 72.0%.

Training R^2: 0.5219. Test R^2: 0.3990. Loss Train: 0.0256. Loss Val: 0.0356. Time: 2.5520

EPOCH 370. Progress: 74.0%.

Training R^2: 0.4989. Test R^2: 0.4453. Loss Train: 0.0254. Loss Val: 0.0372. Time: 2.2263

EPOCH 380. Progress: 76.0%.

Training R^2: 0.5259. Test R^2: 0.4489. Loss Train: 0.0256. Loss Val: 0.0375. Time: 2.3282

EPOCH 390. Progress: 78.0%.

Training R^2: 0.5307. Test R^2: 0.4354. Loss Train: 0.0256. Loss Val: 0.0354. Time: 2.7453

EPOCH 400. Progress: 80.0%.

Training R^2: 0.5182. Test R^2: 0.3862. Loss Train: 0.0257. Loss Val: 0.0357. Time: 2.2663

EPOCH 410. Progress: 82.0%.

Training R^2: 0.5016. Test R^2: 0.4173. Loss Train: 0.0256. Loss Val: 0.0354. Time: 2.8625

EPOCH 420. Progress: 84.0%.

Training R^2: 0.5244. Test R^2: 0.4451. Loss Train: 0.0257. Loss Val: 0.0353. Time: 2.4082

EPOCH 430. Progress: 86.0%.

Training R^2: 0.5214. Test R^2: 0.4493. Loss Train: 0.0267. Loss Val: 0.0348. Time: 2.2367

EPOCH 440. Progress: 88.0%.

Training R^2: 0.5235. Test R^2: 0.4562. Loss Train: 0.0255. Loss Val: 0.0357. Time: 2.6790

EPOCH 450. Progress: 90.0%.

Training R^2: 0.4598. Test R^2: 0.4491. Loss Train: 0.0255. Loss Val: 0.0346. Time: 2.3281

EPOCH 460. Progress: 92.0%.

Training R^2: 0.5301. Test R^2: 0.4335. Loss Train: 0.0252. Loss Val: 0.0359. Time: 2.3309

EPOCH 470. Progress: 94.0%.

Training R^2: 0.5316. Test R^2: 0.4573. Loss Train: 0.0256. Loss Val: 0.0364. Time: 2.3654

EPOCH 480. Progress: 96.0%.

Training R^2: 0.4818. Test R^2: 0.4548. Loss Train: 0.0255. Loss Val: 0.0356. Time: 2.9039

EPOCH 490. Progress: 98.0%.

Training R^2: 0.5159. Test R^2: 0.4394. Loss Train: 0.0256. Loss Val: 0.0348. Time: 2.6864

EPOCH 500. Progress: 100.0%.

Training R^2: 0.5061. Test R^2: 0.4538. Loss Train: 0.0254. Loss Val: 0.0348. Time: 2.3089

saving ./plots/lr0.001FFNet_momentum0.9_wdecay0.001_dampening0_nesterovFalse_HidDim64.png

lr: 0.001, momentum: 0.9, weight_decay: 0.001, dampening: 0, nesterov: False

FeedForwardNet(

(fc1): Linear(in_features=47, out_features=128, bias=True)

(fc2): Linear(in_features=128, out_features=1, bias=True)

)

optimizer: SGD (

Parameter Group 0

dampening: 0

lr: 0.001

momentum: 0.9

nesterov: False

weight_decay: 0.001

)

n. of epochs: 500

EPOCH 0. Progress: 0.0%.

Training R^2: -0.1650. Test R^2: 0.1370. Loss Train: 0.1189. Loss Val: 0.0560. Time: 2.2674

EPOCH 10. Progress: 2.0%.

Training R^2: 0.3564. Test R^2: 0.3440. Loss Train: 0.0348. Loss Val: 0.0429. Time: 3.4910

EPOCH 20. Progress: 4.0%.

Training R^2: 0.4045. Test R^2: 0.3636. Loss Train: 0.0316. Loss Val: 0.0414. Time: 2.2355

EPOCH 30. Progress: 6.0%.

Training R^2: 0.4447. Test R^2: 0.3739. Loss Train: 0.0294. Loss Val: 0.0425. Time: 2.1547

EPOCH 40. Progress: 8.0%.

Training R^2: 0.4718. Test R^2: 0.3802. Loss Train: 0.0298. Loss Val: 0.0385. Time: 2.1527

EPOCH 50. Progress: 10.0%.

Training R^2: 0.4640. Test R^2: 0.4105. Loss Train: 0.0283. Loss Val: 0.0374. Time: 2.1591

EPOCH 60. Progress: 12.0%.

Training R^2: 0.4905. Test R^2: 0.4069. Loss Train: 0.0281. Loss Val: 0.0376. Time: 2.1030

EPOCH 70. Progress: 14.000000000000002%.

Training R^2: 0.4900. Test R^2: 0.3919. Loss Train: 0.0270. Loss Val: 0.0376. Time: 2.2291

EPOCH 80. Progress: 16.0%.

Training R^2: 0.4953. Test R^2: 0.4300. Loss Train: 0.0271. Loss Val: 0.0387. Time: 2.1138

EPOCH 90. Progress: 18.0%.

Training R^2: 0.4747. Test R^2: 0.4137. Loss Train: 0.0270. Loss Val: 0.0377. Time: 2.2466

EPOCH 100. Progress: 20.0%.

Training R^2: 0.5014. Test R^2: 0.4071. Loss Train: 0.0268. Loss Val: 0.0369. Time: 2.1457

EPOCH 110. Progress: 22.0%.

Training R^2: 0.5030. Test R^2: 0.4142. Loss Train: 0.0267. Loss Val: 0.0379. Time: 2.0818

EPOCH 120. Progress: 24.0%.

Training R^2: 0.4969. Test R^2: 0.4068. Loss Train: 0.0269. Loss Val: 0.0379. Time: 2.1583

EPOCH 130. Progress: 26.0%.

Training R^2: 0.5030. Test R^2: 0.4296. Loss Train: 0.0266. Loss Val: 0.0364. Time: 2.1612

EPOCH 140. Progress: 28.000000000000004%.

Training R^2: 0.5082. Test R^2: 0.4010. Loss Train: 0.0265. Loss Val: 0.0361. Time: 2.1903

EPOCH 150. Progress: 30.0%.

Training R^2: 0.5041. Test R^2: 0.4510. Loss Train: 0.0260. Loss Val: 0.0359. Time: 2.0836

EPOCH 160. Progress: 32.0%.

Training R^2: 0.5151. Test R^2: 0.4335. Loss Train: 0.0259. Loss Val: 0.0389. Time: 2.3125

EPOCH 170. Progress: 34.0%.

Training R^2: 0.4959. Test R^2: 0.4220. Loss Train: 0.0262. Loss Val: 0.0364. Time: 2.1887

EPOCH 180. Progress: 36.0%.

Training R^2: 0.4698. Test R^2: 0.4247. Loss Train: 0.0261. Loss Val: 0.0366. Time: 2.2226

EPOCH 190. Progress: 38.0%.

Training R^2: 0.5144. Test R^2: 0.3948. Loss Train: 0.0267. Loss Val: 0.0356. Time: 2.1010

EPOCH 200. Progress: 40.0%.

Training R^2: 0.5189. Test R^2: 0.4354. Loss Train: 0.0274. Loss Val: 0.0359. Time: 2.1634

EPOCH 210. Progress: 42.0%.

Training R^2: 0.5187. Test R^2: 0.4082. Loss Train: 0.0261. Loss Val: 0.0355. Time: 2.1772

EPOCH 220. Progress: 44.0%.

Training R^2: 0.4928. Test R^2: 0.4425. Loss Train: 0.0259. Loss Val: 0.0356. Time: 2.2574

EPOCH 230. Progress: 46.0%.

Training R^2: 0.5200. Test R^2: 0.4205. Loss Train: 0.0258. Loss Val: 0.0364. Time: 2.3135

EPOCH 240. Progress: 48.0%.

Training R^2: 0.5169. Test R^2: 0.3820. Loss Train: 0.0261. Loss Val: 0.0363. Time: 2.1045

EPOCH 250. Progress: 50.0%.

Training R^2: 0.5190. Test R^2: 0.4478. Loss Train: 0.0257. Loss Val: 0.0361. Time: 2.0785

EPOCH 260. Progress: 52.0%.

Training R^2: 0.5122. Test R^2: 0.4269. Loss Train: 0.0259. Loss Val: 0.0356. Time: 2.0899

EPOCH 270. Progress: 54.0%.

Training R^2: 0.5235. Test R^2: 0.4715. Loss Train: 0.0257. Loss Val: 0.0351. Time: 2.3125

EPOCH 280. Progress: 56.00000000000001%.

Training R^2: 0.4812. Test R^2: 0.4334. Loss Train: 0.0256. Loss Val: 0.0361. Time: 2.3766

EPOCH 290. Progress: 57.99999999999999%.

Training R^2: 0.5019. Test R^2: 0.4556. Loss Train: 0.0261. Loss Val: 0.0365. Time: 2.0649

EPOCH 300. Progress: 60.0%.

Training R^2: 0.5156. Test R^2: 0.4563. Loss Train: 0.0265. Loss Val: 0.0371. Time: 2.3816

EPOCH 310. Progress: 62.0%.

Training R^2: 0.5344. Test R^2: 0.4310. Loss Train: 0.0258. Loss Val: 0.0349. Time: 2.0712

EPOCH 320. Progress: 64.0%.

Training R^2: 0.5257. Test R^2: 0.4413. Loss Train: 0.0255. Loss Val: 0.0360. Time: 2.1857

EPOCH 330. Progress: 66.0%.

Training R^2: 0.4794. Test R^2: 0.4378. Loss Train: 0.0252. Loss Val: 0.0364. Time: 2.0993

EPOCH 340. Progress: 68.0%.

Training R^2: 0.5277. Test R^2: 0.4453. Loss Train: 0.0253. Loss Val: 0.0353. Time: 2.1207

EPOCH 350. Progress: 70.0%.

Training R^2: 0.5116. Test R^2: 0.4509. Loss Train: 0.0257. Loss Val: 0.0359. Time: 2.1579

EPOCH 360. Progress: 72.0%.

Training R^2: 0.5204. Test R^2: 0.4529. Loss Train: 0.0254. Loss Val: 0.0349. Time: 2.4573

EPOCH 370. Progress: 74.0%.

Training R^2: 0.5156. Test R^2: 0.4320. Loss Train: 0.0254. Loss Val: 0.0348. Time: 4.0994

EPOCH 380. Progress: 76.0%.

Training R^2: 0.5010. Test R^2: 0.4440. Loss Train: 0.0265. Loss Val: 0.0355. Time: 2.2201

EPOCH 390. Progress: 78.0%.

Training R^2: 0.4996. Test R^2: 0.4569. Loss Train: 0.0257. Loss Val: 0.0349. Time: 2.3245

EPOCH 400. Progress: 80.0%.

Training R^2: 0.5232. Test R^2: 0.4469. Loss Train: 0.0256. Loss Val: 0.0355. Time: 2.3519

EPOCH 410. Progress: 82.0%.

Training R^2: 0.5372. Test R^2: 0.4467. Loss Train: 0.0253. Loss Val: 0.0348. Time: 2.4484

EPOCH 420. Progress: 84.0%.

Training R^2: 0.5324. Test R^2: 0.4684. Loss Train: 0.0257. Loss Val: 0.0352. Time: 2.1310

EPOCH 430. Progress: 86.0%.

Training R^2: 0.4772. Test R^2: 0.4459. Loss Train: 0.0254. Loss Val: 0.0357. Time: 2.2095

EPOCH 440. Progress: 88.0%.

Training R^2: 0.5210. Test R^2: 0.4543. Loss Train: 0.0256. Loss Val: 0.0343. Time: 2.1805

EPOCH 450. Progress: 90.0%.

Training R^2: 0.5117. Test R^2: 0.4440. Loss Train: 0.0253. Loss Val: 0.0365. Time: 2.0739

EPOCH 460. Progress: 92.0%.

Training R^2: 0.5289. Test R^2: 0.4707. Loss Train: 0.0260. Loss Val: 0.0354. Time: 2.1470

EPOCH 470. Progress: 94.0%.

Training R^2: 0.5273. Test R^2: 0.4631. Loss Train: 0.0259. Loss Val: 0.0358. Time: 2.1357

EPOCH 480. Progress: 96.0%.

Training R^2: 0.5195. Test R^2: 0.4381. Loss Train: 0.0256. Loss Val: 0.0358. Time: 2.1766

EPOCH 490. Progress: 98.0%.

Training R^2: 0.5120. Test R^2: 0.4202. Loss Train: 0.0254. Loss Val: 0.0348. Time: 2.1652

EPOCH 500. Progress: 100.0%.

Training R^2: 0.5386. Test R^2: 0.4514. Loss Train: 0.0254. Loss Val: 0.0345. Time: 2.0892

saving ./plots/lr0.001FFNet_momentum0.9_wdecay0.001_dampening0_nesterovFalse_HidDim128.png

[23]:

# Testing a regular FFnet

class FeedforwardNeuralNetModel(nn.Module):

    def __init__(self, in_dim, hid_dim, out_dim):

        super(FeedforwardNeuralNetModel, self).__init__()

        # Linear function

        self.fc1 = nn.Linear(in_dim, hid_dim)

        # Non-linearity

        self.relu = nn.ReLU()

        # Linear function (readout)

        self.fc2 = nn.Linear(hid_dim, out_dim)

    def forward(self, x):

        # Linear function

        out = self.fc1(x)

        # Non-linearity

        out = self.relu(out)

        # Linear function (readout)

        out = self.fc2(out)

        return out

# Best configuration for longer

lr_range = [0.1, 0.01]

hid_dim_range = [64, 128]

weight_decay_range = [0.001]

momentum_range = [0.9]

dampening_range = [0]

nesterov_range = [False]

for lr in lr_range:

    for momentum in momentum_range:

        for weight_decay in weight_decay_range:

            for nesterov in nesterov_range:

                for dampening in dampening_range:

                    for hid_dim in hid_dim_range:

                        print('\nlr: {}, momentum: {}, weight_decay: {}, dampening: {}, nesterov: {} '.format(lr, momentum, weight_decay, dampening, nesterov))

                        model = FeedforwardNeuralNetModel(in_dim=47, hid_dim=hid_dim, out_dim=1).to(device)

                        print(model)

                        SGD = torch.optim.SGD(model.parameters(), lr=lr, momentum=momentum, dampening=dampening, weight_decay=weight_decay, nesterov=nesterov)

                        # Mean squared error loss for the regression target

                        loss_fn = nn.MSELoss()

                        config_str = 'lr' + str(lr) + 'FFNet_ReLU_momentum' + str(momentum) + '_wdecay' + str(weight_decay) + '_dampening' + str(dampening) + '_nesterov' + str(nesterov) + '_HidDim' + str(hid_dim)

                        train(model, loss_fn, SGD, dataloaders['train'], dataloaders['val'], config_str, num_epochs=500, verbose=False)

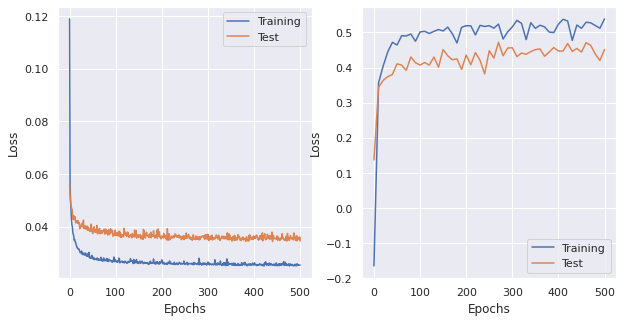

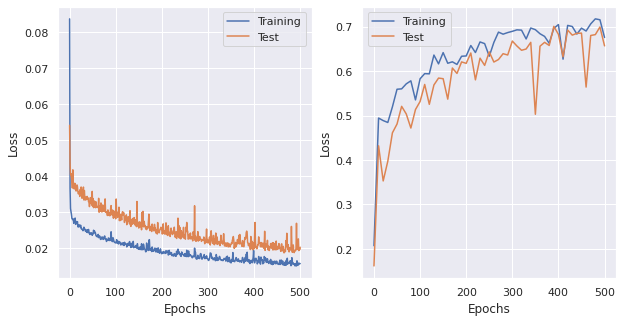

lr: 0.1, momentum: 0.9, weight_decay: 0.001, dampening: 0, nesterov: False

FeedforwardNeuralNetModel(

(fc1): Linear(in_features=47, out_features=64, bias=True)

(relu): ReLU()

(fc2): Linear(in_features=64, out_features=1, bias=True)

)

optimizer: SGD (

Parameter Group 0

dampening: 0

lr: 0.1

momentum: 0.9

nesterov: False

weight_decay: 0.001

)

n. of epochs: 500

EPOCH 0. Progress: 0.0%.

Training R^2: 0.3646. Test R^2: 0.3192. Loss Train: 0.0818. Loss Val: 0.0423. Time: 2.5027

EPOCH 10. Progress: 2.0%.

Training R^2: 0.1156. Test R^2: 0.0489. Loss Train: 0.0308. Loss Val: 0.0594. Time: 2.2376

EPOCH 20. Progress: 4.0%.

Training R^2: 0.5008. Test R^2: 0.3898. Loss Train: 0.0249. Loss Val: 0.0345. Time: 2.1767

EPOCH 30. Progress: 6.0%.

Training R^2: 0.4401. Test R^2: 0.4661. Loss Train: 0.0269. Loss Val: 0.0349. Time: 2.1309

EPOCH 40. Progress: 8.0%.

Training R^2: 0.3220. Test R^2: 0.4064. Loss Train: 0.0248. Loss Val: 0.0372. Time: 2.3498

EPOCH 50. Progress: 10.0%.

Training R^2: 0.5217. Test R^2: 0.4487. Loss Train: 0.0277. Loss Val: 0.0333. Time: 2.7613

EPOCH 60. Progress: 12.0%.

Training R^2: 0.4267. Test R^2: 0.3631. Loss Train: 0.0303. Loss Val: 0.0404. Time: 2.1412

EPOCH 70. Progress: 14.000000000000002%.

Training R^2: 0.5459. Test R^2: 0.4925. Loss Train: 0.0289. Loss Val: 0.0316. Time: 2.1796

EPOCH 80. Progress: 16.0%.

Training R^2: 0.5843. Test R^2: 0.5128. Loss Train: 0.0227. Loss Val: 0.0298. Time: 2.2113

EPOCH 90. Progress: 18.0%.

Training R^2: 0.5063. Test R^2: 0.4281. Loss Train: 0.0249. Loss Val: 0.0350. Time: 2.5134

EPOCH 100. Progress: 20.0%.

Training R^2: 0.5870. Test R^2: 0.5082. Loss Train: 0.0230. Loss Val: 0.0314. Time: 2.3755

EPOCH 110. Progress: 22.0%.

Training R^2: 0.5838. Test R^2: 0.5122. Loss Train: 0.0248. Loss Val: 0.0296. Time: 2.1351

EPOCH 120. Progress: 24.0%.

Training R^2: 0.5589. Test R^2: 0.4741. Loss Train: 0.0267. Loss Val: 0.0315. Time: 2.1909

EPOCH 130. Progress: 26.0%.

Training R^2: 0.5485. Test R^2: 0.4603. Loss Train: 0.0239. Loss Val: 0.0342. Time: 2.1711

EPOCH 140. Progress: 28.000000000000004%.

Training R^2: 0.5472. Test R^2: 0.5175. Loss Train: 0.0244. Loss Val: 0.0301. Time: 2.1524

EPOCH 150. Progress: 30.0%.

Training R^2: 0.5417. Test R^2: 0.5201. Loss Train: 0.0276. Loss Val: 0.0314. Time: 2.1584

EPOCH 160. Progress: 32.0%.

Training R^2: 0.5917. Test R^2: 0.5359. Loss Train: 0.0244. Loss Val: 0.0289. Time: 2.3367

EPOCH 170. Progress: 34.0%.

Training R^2: 0.5555. Test R^2: 0.4634. Loss Train: 0.0261. Loss Val: 0.0346. Time: 2.0601

EPOCH 180. Progress: 36.0%.

Training R^2: 0.4819. Test R^2: 0.3625. Loss Train: 0.0251. Loss Val: 0.0363. Time: 2.1442

EPOCH 190. Progress: 38.0%.

Training R^2: 0.4647. Test R^2: 0.3741. Loss Train: 0.0245. Loss Val: 0.0385. Time: 2.0878

EPOCH 200. Progress: 40.0%.

Training R^2: 0.5655. Test R^2: 0.4926. Loss Train: 0.0234. Loss Val: 0.0311. Time: 2.3180

EPOCH 210. Progress: 42.0%.

Training R^2: 0.5308. Test R^2: 0.4326. Loss Train: 0.0240. Loss Val: 0.0345. Time: 2.2221

EPOCH 220. Progress: 44.0%.

Training R^2: 0.5925. Test R^2: 0.5164. Loss Train: 0.0253. Loss Val: 0.0316. Time: 2.1547

EPOCH 230. Progress: 46.0%.

Training R^2: 0.5802. Test R^2: 0.4898. Loss Train: 0.0255. Loss Val: 0.0318. Time: 2.9204

EPOCH 240. Progress: 48.0%.

Training R^2: 0.5904. Test R^2: 0.5499. Loss Train: 0.0236. Loss Val: 0.0290. Time: 2.1554

EPOCH 250. Progress: 50.0%.

Training R^2: 0.5527. Test R^2: 0.5071. Loss Train: 0.0262. Loss Val: 0.0305. Time: 2.1597

EPOCH 260. Progress: 52.0%.

Training R^2: 0.5447. Test R^2: 0.4810. Loss Train: 0.0255. Loss Val: 0.0335. Time: 2.1949

EPOCH 270. Progress: 54.0%.

Training R^2: 0.5747. Test R^2: 0.4692. Loss Train: 0.0230. Loss Val: 0.0324. Time: 2.1299

EPOCH 280. Progress: 56.00000000000001%.

Training R^2: 0.5909. Test R^2: 0.5150. Loss Train: 0.0233. Loss Val: 0.0316. Time: 2.0958

EPOCH 290. Progress: 57.99999999999999%.

Training R^2: 0.5533. Test R^2: 0.4990. Loss Train: 0.0307. Loss Val: 0.0322. Time: 2.2280

EPOCH 300. Progress: 60.0%.

Training R^2: 0.5911. Test R^2: 0.5194. Loss Train: 0.0244. Loss Val: 0.0296. Time: 2.0876

EPOCH 310. Progress: 62.0%.

Training R^2: 0.5781. Test R^2: 0.5346. Loss Train: 0.0246. Loss Val: 0.0312. Time: 2.1579

EPOCH 320. Progress: 64.0%.

Training R^2: 0.5869. Test R^2: 0.4697. Loss Train: 0.0293. Loss Val: 0.0308. Time: 2.1631

EPOCH 330. Progress: 66.0%.

Training R^2: 0.5359. Test R^2: 0.5073. Loss Train: 0.0249. Loss Val: 0.0322. Time: 2.1336

EPOCH 340. Progress: 68.0%.

Training R^2: 0.3939. Test R^2: 0.3366. Loss Train: 0.0255. Loss Val: 0.0414. Time: 2.5117

EPOCH 350. Progress: 70.0%.

Training R^2: 0.5605. Test R^2: 0.5145. Loss Train: 0.0245. Loss Val: 0.0322. Time: 2.1713

EPOCH 360. Progress: 72.0%.

Training R^2: 0.5575. Test R^2: 0.4871. Loss Train: 0.0250. Loss Val: 0.0341. Time: 2.1935

EPOCH 370. Progress: 74.0%.

Training R^2: 0.5218. Test R^2: 0.4586. Loss Train: 0.0244. Loss Val: 0.0341. Time: 2.1901

EPOCH 380. Progress: 76.0%.

Training R^2: 0.3631. Test R^2: 0.2953. Loss Train: 0.0306. Loss Val: 0.0404. Time: 2.3216

EPOCH 390. Progress: 78.0%.

Training R^2: 0.5393. Test R^2: 0.4570. Loss Train: 0.0252. Loss Val: 0.0342. Time: 2.1549

EPOCH 400. Progress: 80.0%.

Training R^2: 0.5360. Test R^2: 0.4534. Loss Train: 0.0275. Loss Val: 0.0353. Time: 2.1271

EPOCH 410. Progress: 82.0%.

Training R^2: 0.5757. Test R^2: 0.4784. Loss Train: 0.0249. Loss Val: 0.0299. Time: 2.1469

EPOCH 420. Progress: 84.0%.

Training R^2: 0.5373. Test R^2: 0.5167. Loss Train: 0.0230. Loss Val: 0.0320. Time: 2.0999

EPOCH 430. Progress: 86.0%.

Training R^2: 0.5818. Test R^2: 0.4904. Loss Train: 0.0247. Loss Val: 0.0332. Time: 2.1413

EPOCH 440. Progress: 88.0%.

Training R^2: 0.4773. Test R^2: 0.4302. Loss Train: 0.0263. Loss Val: 0.0355. Time: 2.1924

EPOCH 450. Progress: 90.0%.

Training R^2: 0.4953. Test R^2: 0.4957. Loss Train: 0.0241. Loss Val: 0.0323. Time: 2.0620

EPOCH 460. Progress: 92.0%.

Training R^2: 0.5256. Test R^2: 0.5028. Loss Train: 0.0247. Loss Val: 0.0302. Time: 2.1600

EPOCH 470. Progress: 94.0%.

Training R^2: 0.3495. Test R^2: 0.4748. Loss Train: 0.0278. Loss Val: 0.0320. Time: 2.2276

EPOCH 480. Progress: 96.0%.

Training R^2: 0.4896. Test R^2: 0.4707. Loss Train: 0.0258. Loss Val: 0.0345. Time: 2.3685

EPOCH 490. Progress: 98.0%.

Training R^2: 0.6014. Test R^2: 0.5493. Loss Train: 0.0254. Loss Val: 0.0291. Time: 2.1611

EPOCH 500. Progress: 100.0%.

Training R^2: 0.5324. Test R^2: 0.5026. Loss Train: 0.0244. Loss Val: 0.0318. Time: 2.2871

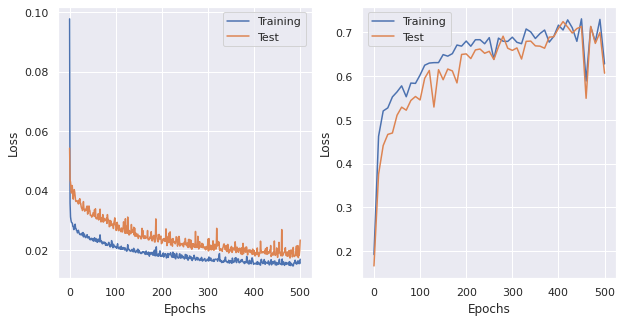

saving ./plots/lr0.1FFNet_ReLU_momentum0.9_wdecay0.001_dampening0_nesterovFalse_HidDim64.png

lr: 0.1, momentum: 0.9, weight_decay: 0.001, dampening: 0, nesterov: False

FeedforwardNeuralNetModel(

(fc1): Linear(in_features=47, out_features=128, bias=True)

(relu): ReLU()

(fc2): Linear(in_features=128, out_features=1, bias=True)

)

optimizer: SGD (

Parameter Group 0

dampening: 0

lr: 0.1

momentum: 0.9

nesterov: False

weight_decay: 0.001

)

n. of epochs: 500
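
The optimizer fields printed above (lr, momentum, dampening, nesterov, weight_decay) correspond to the update rule documented for `torch.optim.SGD`. The following is a minimal pure-Python sketch of that rule for a single scalar parameter, so the role of each setting is visible. The function name `sgd_step` and the example gradient value are made up for illustration and are not part of the notebook.

```python
def sgd_step(param, grad, buf, lr=0.1, momentum=0.9, weight_decay=0.001,
             dampening=0.0, nesterov=False):
    """One SGD update for a scalar parameter; returns (new_param, new_buf).

    Hypothetical helper mirroring the documented torch.optim.SGD rule.
    Defaults match the settings printed for this run.
    """
    # Weight decay is an L2 penalty folded into the gradient.
    g = grad + weight_decay * param
    if momentum != 0:
        if buf is None:
            # First step: the momentum buffer starts as the gradient itself.
            buf = g
        else:
            # Later steps: exponentially weighted running sum of gradients.
            buf = momentum * buf + (1 - dampening) * g
        # Nesterov looks ahead along the buffer; plain momentum uses the buffer.
        g = g + momentum * buf if nesterov else buf
    return param - lr * g, buf


# Two consecutive steps with an illustrative constant gradient of 0.5.
p, b = sgd_step(1.0, 0.5, None)
p, b = sgd_step(p, 0.5, b)
print(p, b)
```

With momentum 0.9 the buffer nearly doubles between the first and second step, so at lr 0.1 the effective step grows quickly. That is one plausible reason the per-epoch scores in this run jump around more than in the lr 0.01 run.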

EPOCH 0. Progress: 0.0%.

Training R^2: 0.2283. Test R^2: 0.2728. Loss Train: 0.0909. Loss Val: 0.0450. Time: 2.7173

EPOCH 10. Progress: 2.0%.

Training R^2: 0.5042. Test R^2: 0.3939. Loss Train: 0.0308. Loss Val: 0.0374. Time: 2.1023

EPOCH 20. Progress: 4.0%.

Training R^2: 0.4693. Test R^2: 0.4194. Loss Train: 0.0277. Loss Val: 0.0383. Time: 2.0253

EPOCH 30. Progress: 6.0%.

Training R^2: 0.5159. Test R^2: 0.4670. Loss Train: 0.0281. Loss Val: 0.0342. Time: 1.9902

EPOCH 40. Progress: 8.0%.

Training R^2: 0.5297. Test R^2: 0.4673. Loss Train: 0.0303. Loss Val: 0.0332. Time: 2.0638

EPOCH 50. Progress: 10.0%.

Training R^2: 0.4762. Test R^2: 0.4752. Loss Train: 0.0288. Loss Val: 0.0344. Time: 2.0154

EPOCH 60. Progress: 12.0%.

Training R^2: 0.5820. Test R^2: 0.4874. Loss Train: 0.0242. Loss Val: 0.0311. Time: 1.9918

EPOCH 70. Progress: 14.000000000000002%.

Training R^2: 0.5141. Test R^2: 0.5016. Loss Train: 0.0249. Loss Val: 0.0318. Time: 2.0654

EPOCH 80. Progress: 16.0%.

Training R^2: 0.5299. Test R^2: 0.4122. Loss Train: 0.0248. Loss Val: 0.0366. Time: 2.0807

EPOCH 90. Progress: 18.0%.

Training R^2: 0.5084. Test R^2: 0.4630. Loss Train: 0.0238. Loss Val: 0.0351. Time: 2.0694

EPOCH 100. Progress: 20.0%.

Training R^2: 0.5886. Test R^2: 0.5127. Loss Train: 0.0267. Loss Val: 0.0292. Time: 2.3217

EPOCH 110. Progress: 22.0%.

Training R^2: 0.5817. Test R^2: 0.5371. Loss Train: 0.0245. Loss Val: 0.0301. Time: 1.9418

EPOCH 120. Progress: 24.0%.

Training R^2: 0.4942. Test R^2: 0.3745. Loss Train: 0.0241. Loss Val: 0.0372. Time: 2.0107

EPOCH 130. Progress: 26.0%.

Training R^2: 0.5857. Test R^2: 0.5444. Loss Train: 0.0229. Loss Val: 0.0286. Time: 2.0223

EPOCH 140. Progress: 28.000000000000004%.

Training R^2: 0.3522. Test R^2: 0.2840. Loss Train: 0.0253. Loss Val: 0.0461. Time: 2.0770

EPOCH 150. Progress: 30.0%.

Training R^2: 0.6004. Test R^2: 0.5261. Loss Train: 0.0258. Loss Val: 0.0298. Time: 2.6616

EPOCH 160. Progress: 32.0%.

Training R^2: 0.5011. Test R^2: 0.4417. Loss Train: 0.0314. Loss Val: 0.0363. Time: 2.1031

EPOCH 170. Progress: 34.0%.

Training R^2: 0.5446. Test R^2: 0.4964. Loss Train: 0.0241. Loss Val: 0.0298. Time: 2.7993

EPOCH 180. Progress: 36.0%.

Training R^2: 0.5949. Test R^2: 0.5359. Loss Train: 0.0219. Loss Val: 0.0297. Time: 1.7726

EPOCH 190. Progress: 38.0%.

Training R^2: 0.5681. Test R^2: 0.5055. Loss Train: 0.0234. Loss Val: 0.0325. Time: 2.2046

EPOCH 200. Progress: 40.0%.

Training R^2: 0.5713. Test R^2: 0.4666. Loss Train: 0.0258. Loss Val: 0.0318. Time: 2.1275

EPOCH 210. Progress: 42.0%.

Training R^2: 0.4814. Test R^2: 0.4655. Loss Train: 0.0252. Loss Val: 0.0334. Time: 2.6702

EPOCH 220. Progress: 44.0%.

Training R^2: 0.5877. Test R^2: 0.5165. Loss Train: 0.0226. Loss Val: 0.0284. Time: 2.3608

EPOCH 230. Progress: 46.0%.

Training R^2: 0.2601. Test R^2: 0.2126. Loss Train: 0.0261. Loss Val: 0.0508. Time: 2.8814

EPOCH 240. Progress: 48.0%.

Training R^2: 0.3508. Test R^2: 0.3333. Loss Train: 0.0272. Loss Val: 0.0426. Time: 2.2305

EPOCH 250. Progress: 50.0%.

Training R^2: 0.5467. Test R^2: 0.5143. Loss Train: 0.0257. Loss Val: 0.0296. Time: 2.4325

EPOCH 260. Progress: 52.0%.

Training R^2: 0.4763. Test R^2: 0.4690. Loss Train: 0.0284. Loss Val: 0.0351. Time: 2.6164

EPOCH 270. Progress: 54.0%.

Training R^2: 0.5053. Test R^2: 0.4363. Loss Train: 0.0280. Loss Val: 0.0361. Time: 2.3808

EPOCH 280. Progress: 56.00000000000001%.

Training R^2: 0.5457. Test R^2: 0.5389. Loss Train: 0.0236. Loss Val: 0.0302. Time: 2.1011

EPOCH 290. Progress: 57.99999999999999%.

Training R^2: 0.5960. Test R^2: 0.5262. Loss Train: 0.0260. Loss Val: 0.0295. Time: 2.2730

EPOCH 300. Progress: 60.0%.

Training R^2: 0.5312. Test R^2: 0.5019. Loss Train: 0.0225. Loss Val: 0.0328. Time: 2.2471

EPOCH 310. Progress: 62.0%.

Training R^2: 0.5914. Test R^2: 0.5194. Loss Train: 0.0227. Loss Val: 0.0310. Time: 2.1525

EPOCH 320. Progress: 64.0%.

Training R^2: 0.4003. Test R^2: 0.3783. Loss Train: 0.0240. Loss Val: 0.0390. Time: 2.0833

EPOCH 330. Progress: 66.0%.

Training R^2: 0.6086. Test R^2: 0.5362. Loss Train: 0.0260. Loss Val: 0.0285. Time: 1.9955

EPOCH 340. Progress: 68.0%.

Training R^2: 0.4901. Test R^2: 0.4330. Loss Train: 0.0242. Loss Val: 0.0356. Time: 2.1022

EPOCH 350. Progress: 70.0%.

Training R^2: 0.5079. Test R^2: 0.4892. Loss Train: 0.0285. Loss Val: 0.0334. Time: 2.0113

EPOCH 360. Progress: 72.0%.

Training R^2: 0.5812. Test R^2: 0.5080. Loss Train: 0.0222. Loss Val: 0.0305. Time: 2.2167

EPOCH 370. Progress: 74.0%.

Training R^2: 0.4940. Test R^2: 0.4996. Loss Train: 0.0241. Loss Val: 0.0316. Time: 2.0167

EPOCH 380. Progress: 76.0%.

Training R^2: 0.5079. Test R^2: 0.4638. Loss Train: 0.0228. Loss Val: 0.0327. Time: 2.1083

EPOCH 390. Progress: 78.0%.

Training R^2: 0.5362. Test R^2: 0.4818. Loss Train: 0.0228. Loss Val: 0.0343. Time: 2.0472

EPOCH 400. Progress: 80.0%.

Training R^2: 0.3227. Test R^2: 0.2568. Loss Train: 0.0262. Loss Val: 0.0475. Time: 2.1222

EPOCH 410. Progress: 82.0%.

Training R^2: 0.5846. Test R^2: 0.5075. Loss Train: 0.0231. Loss Val: 0.0304. Time: 2.0978

EPOCH 420. Progress: 84.0%.

Training R^2: 0.5472. Test R^2: 0.4843. Loss Train: 0.0258. Loss Val: 0.0327. Time: 2.0564

EPOCH 430. Progress: 86.0%.

Training R^2: 0.6086. Test R^2: 0.5734. Loss Train: 0.0248. Loss Val: 0.0289. Time: 2.3157

EPOCH 440. Progress: 88.0%.

Training R^2: 0.5590. Test R^2: 0.5247. Loss Train: 0.0233. Loss Val: 0.0302. Time: 2.2981

EPOCH 450. Progress: 90.0%.

Training R^2: 0.5330. Test R^2: 0.4452. Loss Train: 0.0237. Loss Val: 0.0330. Time: 2.2508

EPOCH 460. Progress: 92.0%.

Training R^2: 0.5879. Test R^2: 0.5059. Loss Train: 0.0243. Loss Val: 0.0320. Time: 2.3504

EPOCH 470. Progress: 94.0%.

Training R^2: 0.5669. Test R^2: 0.4830. Loss Train: 0.0298. Loss Val: 0.0332. Time: 2.4113

EPOCH 480. Progress: 96.0%.

Training R^2: 0.5283. Test R^2: 0.4607. Loss Train: 0.0257. Loss Val: 0.0355. Time: 2.3665

EPOCH 490. Progress: 98.0%.

Training R^2: 0.5325. Test R^2: 0.4850. Loss Train: 0.0241. Loss Val: 0.0329. Time: 2.3898

EPOCH 500. Progress: 100.0%.

Training R^2: 0.5753. Test R^2: 0.5075. Loss Train: 0.0240. Loss Val: 0.0324. Time: 2.6969

saving ./plots/lr0.1FFNet_ReLU_momentum0.9_wdecay0.001_dampening0_nesterovFalse_HidDim128.png
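
The logged scores for this run vary widely between checkpoints (Test R^2 ranges from roughly 0.21 to 0.57), so the last line alone is a poor summary. The following is a small sketch for scanning a run for its best logged epoch, assuming the output has been captured as a string in the format printed above. `best_epoch` is a hypothetical helper, not something the notebook defines.

```python
import re

# Patterns for the two line types printed by the training loop.
EPOCH_RE = re.compile(r"EPOCH (\d+)\.")
TEST_R2_RE = re.compile(r"Test R\^2: (-?\d+\.\d+)")


def best_epoch(log_text):
    """Return (epoch, test_r2) for the logged epoch with the highest Test R^2."""
    best = None
    current_epoch = None
    for line in log_text.splitlines():
        match = EPOCH_RE.search(line)
        if match:
            current_epoch = int(match.group(1))
            continue
        match = TEST_R2_RE.search(line)
        if match and current_epoch is not None:
            score = float(match.group(1))
            if best is None or score > best[1]:
                best = (current_epoch, score)
    return best


# Three checkpoints copied from the run above.
sample = """EPOCH 420. Progress: 84.0%.
Training R^2: 0.5472. Test R^2: 0.4843. Loss Train: 0.0258. Loss Val: 0.0327. Time: 2.0564
EPOCH 430. Progress: 86.0%.
Training R^2: 0.6086. Test R^2: 0.5734. Loss Train: 0.0248. Loss Val: 0.0289. Time: 2.3157
EPOCH 440. Progress: 88.0%.
Training R^2: 0.5590. Test R^2: 0.5247. Loss Train: 0.0233. Loss Val: 0.0302. Time: 2.2981
"""
print(best_epoch(sample))  # -> (430, 0.5734)
```

Note that picking a checkpoint by its test score makes that score an optimistic estimate. A separate validation split would be needed for an unbiased number.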

lr: 0.01, momentum: 0.9, weight_decay: 0.001, dampening: 0, nesterov: False

FeedforwardNeuralNetModel(

(fc1): Linear(in_features=47, out_features=64, bias=True)

(relu): ReLU()

(fc2): Linear(in_features=64, out_features=1, bias=True)

)

optimizer: SGD (

Parameter Group 0

dampening: 0

lr: 0.01

momentum: 0.9

nesterov: False

weight_decay: 0.001

)

n. of epochs: 500

EPOCH 0. Progress: 0.0%.

Training R^2: 0.2071. Test R^2: 0.1615. Loss Train: 0.0837. Loss Val: 0.0541. Time: 5.2521

EPOCH 10. Progress: 2.0%.

Training R^2: 0.4945. Test R^2: 0.4320. Loss Train: 0.0276. Loss Val: 0.0365. Time: 2.5691

EPOCH 20. Progress: 4.0%.

Training R^2: 0.4887. Test R^2: 0.3529. Loss Train: 0.0265. Loss Val: 0.0354. Time: 2.6201

EPOCH 30. Progress: 6.0%.

Training R^2: 0.4845. Test R^2: 0.3973. Loss Train: 0.0252. Loss Val: 0.0370. Time: 3.0503

EPOCH 40. Progress: 8.0%.

Training R^2: 0.5197. Test R^2: 0.4612. Loss Train: 0.0244. Loss Val: 0.0340. Time: 2.6444

EPOCH 50. Progress: 10.0%.

Training R^2: 0.5593. Test R^2: 0.4810. Loss Train: 0.0240. Loss Val: 0.0317. Time: 2.2123

EPOCH 60. Progress: 12.0%.

Training R^2: 0.5602. Test R^2: 0.5208. Loss Train: 0.0232. Loss Val: 0.0313. Time: 2.2148

EPOCH 70. Progress: 14.000000000000002%.

Training R^2: 0.5714. Test R^2: 0.5037. Loss Train: 0.0232. Loss Val: 0.0301. Time: 2.3758

EPOCH 80. Progress: 16.0%.

Training R^2: 0.5782. Test R^2: 0.4720. Loss Train: 0.0219. Loss Val: 0.0300. Time: 2.3277

EPOCH 90. Progress: 18.0%.

Training R^2: 0.5353. Test R^2: 0.5136. Loss Train: 0.0246. Loss Val: 0.0319. Time: 2.3695

EPOCH 100. Progress: 20.0%.

Training R^2: 0.5828. Test R^2: 0.5317. Loss Train: 0.0217. Loss Val: 0.0282. Time: 2.6416

EPOCH 110. Progress: 22.0%.

Training R^2: 0.5944. Test R^2: 0.5701. Loss Train: 0.0214. Loss Val: 0.0278. Time: 2.5943