1.5. Emulating FLEXPART with a Multi-Layer Perceptron

1.5.1. Carlos Gómez-Ortiz

1.5.2. Department of Physical Geography and Ecosystem Science

1.5.3. Lund University

1.5.3.1. Abstract

1.5.4. Inverse modeling is a commonly used method and a formal approach to estimate the variables driving the evolution of a system, e.g. greenhouse gases (GHG) sources and sinks, based on the observable manifestations of that system, e.g. GHG concentrations in the atmosphere. This has been developed and applied for decades and it covers a wide range of techniques and mathematical approaches as well as topics in the field of the biogeochemistry. This implies the use of multiple models such as a CTM for generating background concentrations, a Lagrangian transport model to generate regional concentrations, and multiple flux models to generate prior emissions. All these models take several computational time besides the proper computational time of the inverse modeling. Replacing one of these steps with a tool that emulates its functioning but at a lower computational cost could facilitate testing and benchmarking tasks. LUMIA (Lund University Modular Inversion Algorithm) (Monteil & Scholze, 2019) is a variational atmospheric inverse modeling system developed within the regional European atmospheric transport inversion comparison (EUROCOM) project (Monteil et al., 2020) for optimizing terrestrial surface CO2 fluxes over Europe using ICOS in-situ observations. In this Jupyter Notebook, I will apply a AI tool to emulate the Lagrangian model FLEXPART to simulate the regional concentrations at one of the stations whitin the EUROCOM project.

1.5.4.1. Import packages

[43]:

import sys

sys.path.append('/home/carlos/Downloads/urlgrabber-4.0.0')

import os

from urlgrabber.grabber import URLGrabber

from netCDF4 import Dataset

from lumia import Tools, obsdb

from numpy import array, zeros, meshgrid, where, delete, unique, nan, ones, column_stack

from datetime import datetime, timedelta

from pandas import date_range, Timestamp

from matplotlib.pyplot import plot, subplots, subplots_adjust, legend, tight_layout

from seaborn import histplot

import cartopy

1.5.4.2. Download data

[3]:

def getFiles(folder, project, inv, files, dest='Downloads', force=False):#'observations.apri.tar.gz', 'observations.apos.tar.gz', 'modelData.apri.nc', 'modelData.apos.nc'], force=False):

dest = os.path.join(dest, inv)

if not os.path.exists(dest):

os.makedirs(dest)

with open(os.path.join(os.environ['HOME'],'.secrets/swestore'), 'r') as fid:

uname, pwd = fid.readline().split()

grab = URLGrabber(prefix=f'https://webdav.swestore.se/snic/tmflex/{folder}/{project}/{inv}'.encode(), username=uname, password=pwd)

for file in files :

if not os.path.exists(os.path.join(dest, file)) and not force :

print(grab.urlgrab(file, filename=f'{dest}/{file}'))

1.5.4.3. Retrieve data from swestore

[3]:

folder = 'FLEXPART'

# project = 'verify'

project = 'footprints'

inv = 'LPJGUESS_ERA5_hourly.200-g.1.0-e-monthly.weekly_'

# inv = 'eurocom05x05/verify'

files = ['htm.150m.2019-01.hdf']

# getFiles(folder, project, inv, files)

1.5.4.4. Plot fluxes and observations

1.5.4.4.1. Read the fluxes

[4]:

emis_apri = Dataset(os.path.join('Downloads', inv, 'modelData.apri.nc'))

emis_apos = Dataset(os.path.join('Downloads', inv, 'modelData.apos.nc'))

biosphere_apri = emis_apri['biosphere']['emis'][:]

biosphere_apos = emis_apos['biosphere']['emis'][:]

1.5.4.4.2. Read the observations

[5]:

db = obsdb(os.path.join('Downloads', inv, 'observations.apos.tar.gz'))

1.5.4.4.3. Determine the spatial and temporal coordinates

[6]:

reg = Tools.regions.region(latitudes=emis_apri['biosphere']['lats'][:], longitudes=emis_apri['biosphere']['lons'][:])

tstart = array([datetime(*x) for x in emis_apri['biosphere']['times_start']])

tend = array([datetime(*x) for x in emis_apri['biosphere']['times_end']])

dt = tend[0]-tstart[0]

1.5.4.4.4. Plot the observation sites

[53]:

f, ax = subplots(1, 1, subplot_kw=dict(projection=cartopy.crs.PlateCarree()), figsize=(20,20))

extent = (reg.lonmin, reg.lonmax, reg.latmin, reg.latmax)

lons, lats = meshgrid(reg.lons, reg.lats)

vmax = abs(bio_apri_monthly).max()*.75

bio_data = bio_apri_monthly[0, :, :]

bio_data[bio_data == 0.0] = nan

ax.coastlines()

ax.imshow(bio_data, extent=extent, cmap='GnBu', vmin=-vmax, vmax=vmax, origin='lower')

coord = zeros((33, 2))

site_i = []

for isite, site in enumerate(db.sites.itertuples()):

dbs = db.observations.loc[db.observations.site == site.Index]

coord[isite,:] = array((list(dbs.lon)[0], list(dbs.lat)[0]))

site_i.append(site.Index)

ax.scatter(coord[:,0],coord[:,1], s=50)

for i, txt in enumerate(site_i):

ax.annotate(txt, (coord[i,0], coord[i,1]))

plt.savefig('Map')

1.5.4.4.5. Compute monthly and daily fluxes in PgC

[7]:

biosphere_apri *= reg.area[None, :, :]*dt.total_seconds() * 44.e-6 * 1.e-15

biosphere_apos *= reg.area[None, :, :]*dt.total_seconds() * 44.e-6 * 1.e-15

months = array([t.month for t in tstart])

bio_apri_monthly = zeros((12, reg.nlat, reg.nlon))

bio_apos_monthly = zeros((12, reg.nlat, reg.nlon))

for month in range(12):

bio_apri_monthly[month, :, :] = biosphere_apri[months == month+1, :, :].sum(0)

bio_apos_monthly[month, :, :] = biosphere_apos[months == month+1, :, :].sum(0)

bios_apri_daily = biosphere_apri.reshape((-1, 24, reg.nlat, reg.nlon)).sum(1)

bios_apos_daily = biosphere_apos.reshape((-1, 24, reg.nlat, reg.nlon)).sum(1)

1.5.4.4.6. Plot daily fluxes aggregated over the domain

[10]:

f, ax = subplots(1, 1, figsize=(20, 4))

times = date_range(datetime(2018,1,1), datetime(2019,1,1), freq='d', closed='left')

ax.plot(times, bios_apri_daily.sum((1,2))/3600, label='apri')

ax.plot(times, bios_apos_daily.sum((1,2))/3600, label='apos')

ax.grid()

ax.legend()

[10]:

<matplotlib.legend.Legend at 0x7fab9d35f250>

1.5.4.4.7. Plot the fit to observations

[89]:

db = obsdb(os.path.join('Downloads', inv, 'observations.apos.tar.gz'))

nsites = db.sites.shape[0]

f, ax = subplots(nsites, 2, figsize=(20, 3.5*nsites), gridspec_kw={'width_ratios':[10, 2]})

for isite, site in enumerate(db.sites.itertuples()):

dbs = db.observations.loc[db.observations.site == site.Index]

ax[isite, 0].plot(dbs.time, dbs.obs, label='obs', marker='.', lw=0, color='k')

ax[isite, 0].plot(dbs.time, dbs.mix_apri, label='apri', marker='.', lw=0, color='MediumVioletRed', alpha=.5, ms=3)

ax[isite, 0].plot(dbs.time, dbs.mix_apos, label='apos', marker='.', lw=0, color='DodgerBlue', alpha=.5, ms=3)

ax[isite, 0].plot(dbs.time, dbs.mix_background, label='background', marker='.', lw=0, color='FireBrick', alpha=.5, ms=3)

ax[isite, 0].legend()

ax[isite, 0].set_title(site.name)

ax[isite, 0].set_xlim(db.observations.time.min(), db.observations.time.max())

histplot(y=dbs.mix_apri-dbs.obs, ax=ax[isite, 1], kde=True, label='apri', alpha=.2, color='MediumVioletRed', element='step', stat='probability')

histplot(y=dbs.mix_apos-dbs.obs, ax=ax[isite, 1], kde=True, label='apos', alpha=.2, color='DodgerBlue', element='step', stat='probability')

ax[isite, 1].legend()

ax[isite, 1].set_ylim(-15, 15)

ax[isite, 1].grid()

ax[isite, 0].grid()

tight_layout()

1.5.4.5. Modeling observations

1.5.4.5.1. Time-series

[54]:

%%time

dbs = db.observations.loc[db.observations.site == 'htm']

tid = list(emis_apri['biosphere']['times_start'])

for i in range(len(tid)):

tid[i] = datetime(*tid[i])

time_filled = []

for i in range(len(list(dbs.time))):

t = where((array(tid) >= Timestamp.to_pydatetime(list(dbs.time)[i])-timedelta(minutes=30)) & (array(tid) <= Timestamp.to_pydatetime(list(dbs.time)[i])+timedelta(minutes=30)))[0][0]

time_filled.append(t)

CPU times: user 1min 57s, sys: 675 ms, total: 1min 57s

Wall time: 1min 57s

[55]:

time_gap = delete(array(tid), time_filled, 0)

time_gap_plot = ones((len(time_gap)))*450

time_gap_plot = column_stack((time_gap,time_gap_plot))

f, ax = subplots(1, 1, figsize=(20, 3.5))

ax.plot(dbs.time, dbs.obs, label='obs', marker='.', lw=0, color='k')

ax.plot(time_gap_plot[:, 0], time_gap_plot[:, 1], label='gap', marker=',', lw=0, color='r')

ax.legend()

ax.set_title('Hyltemossa')

ax.set_xlim(db.observations.time.min(), db.observations.time.max())

plt.savefig('Gaps')

1.5.4.5.2. Preparing input data

[56]:

lat = where((array(emis_apos['biosphere']['lats']) >= list(dbs.lat)[0]-0.25) & (array(emis_apos['biosphere']['lats']) <= list(dbs.lat)[0]+0.25))[0][0]

lon = where((array(emis_apos['biosphere']['lons']) >= list(dbs.lon)[0]-0.25) & (array(emis_apos['biosphere']['lons']) <= list(dbs.lon)[0]+0.25))[0][0]

biosphere_apos_htm = emis_apos['biosphere']['emis'][:, lat, lon]

fossil_apos_htm = emis_apos['fossil']['emis'][:, lat, lon]

biosphere_apos_htm_nogap = biosphere_apos_htm[time_filled]

fossil_apos_htm_nogap = fossil_apos_htm[time_filled]

x = column_stack((biosphere_apos_htm_nogap, fossil_apos_htm_nogap))

d = array(dbs.mix_apos)

1.5.4.5.3. Importing additional packages

[57]:

from tensorflow.keras import backend as K

from tensorflow.keras import metrics, regularizers, optimizers

from tensorflow.keras.models import Model, Sequential, load_model

from tensorflow.keras.layers import Dense, Dropout, Flatten, Input, Activation

from tensorflow.keras.layers import TimeDistributed

from tensorflow.keras.layers import Lambda, concatenate

from tensorflow.keras.layers import LSTM, GRU, SimpleRNN, RNN

from tensorflow.keras.optimizers import SGD, Adam, RMSprop, Nadam

from sklearn.preprocessing import MinMaxScaler

from sklearn.metrics import mean_squared_error, r2_score

from keras.callbacks import EarlyStopping, ModelCheckpoint

import matplotlib as mpl

import matplotlib.pyplot as plt

import numpy as np

import random as rn

import scipy as sp

import tensorflow as tf

import tensorflow.keras as keras

import time

1.5.4.5.4. MLP model

[58]:

def pipline(inp_dim,

n_nod,

drop_nod,

act_fun = 'relu',

out_act_fun = 'sigmoid',

opt_method = 'Adam',

cost_fun = 'binary_crossentropy',

lr_rate = 0.01,

lambd = 0.0,

num_out = None):

lays = [inp_dim] + n_nod

main_input = Input(shape=(inp_dim,), dtype='float32', name='main_input')

X = main_input

for i, nod in enumerate(n_nod):

X = Dense(nod,

activation = act_fun,

kernel_regularizer=regularizers.l2(lambd))(X)

X = Dropout(drop_nod[i])(X)

output = Dense(num_out, activation = out_act_fun )(X)

method = getattr(optimizers, opt_method)

model = Model(inputs=[main_input], outputs=[output])

model.compile(optimizer = method(lr = lr_rate, clipnorm = 1.0),

loss = cost_fun,

metrics=['accuracy'])

return model

# Standardization function

def standard(comp_data, tv_data):

return (tv_data - np.mean(comp_data, axis=0))/np.std(comp_data, axis=0)

def de_standard(comp_data, pred_data):

return (pred_data * np.std(comp_data, axis=0)) + np.mean(comp_data, axis=0)

1.5.4.5.5. Function to calculate results

[59]:

def stats_reg(d = None, d_pred = None, label = 'Training', estimat = None):

A = ['MSE', 'CorrCoeff']

pcorr = np.corrcoef(d, d_pred)[1,0]

if label.lower() in ['training', 'trn', 'train']:

mse = estimat.history['loss'][-1]

else:

mse = estimat.history['val_loss'][-1]

B = [mse, pcorr]

print('\n','#'*10,'STATISTICS for {} Data'.format(label), '#'*10, '\n')

for r in zip(A,B):

print(*r, sep = ' ')

return print('\n','#'*50)

1.5.4.5.6. Generating training and validation datasets

[60]:

# Generate training and validation data

train_size = int(x.shape[0] * .75)

val_size = x.shape[0] - train_size

train_index = np.sort(np.random.choice(x.shape[0], train_size, replace=False))

x_train = x[[train_index]]

d_train = d[[train_index]]

x_val = np.delete(x, train_index, 0)

d_val = np.delete(d, train_index, 0)

# Standardization of both inputs and targets

x_train = standard(x, x_train)

x_val = standard(x, x_val)

d_train = standard(d, d_train)

d_val = standard(d, d_val)

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<ipython-input-60-fc2cd7ff4c1a>:8: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

d_train = d[[train_index]]

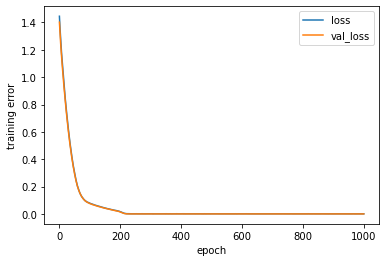

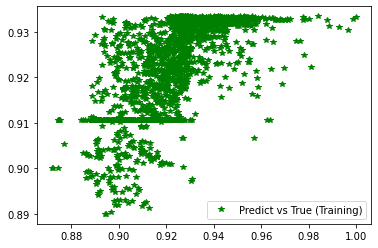

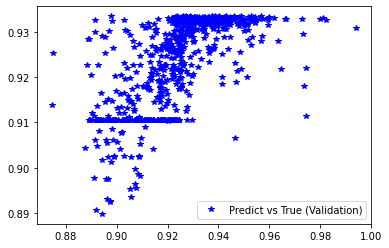

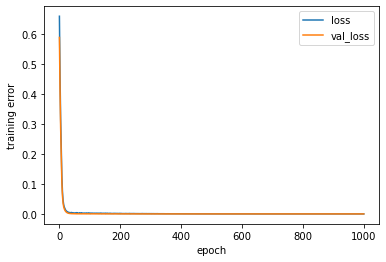

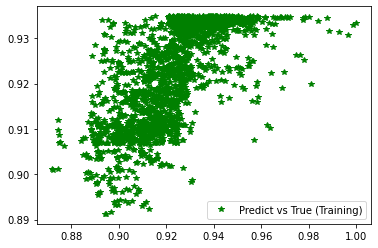

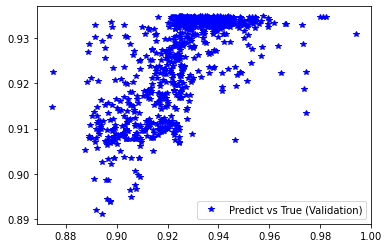

1.5.4.5.7. Training the MLP

[15]:

%%time

# Define the network, cost function and minimization method

INPUT = {'inp_dim': x_train.shape[1],

'n_nod': [15], # number of nodes in hidden layer

'drop_nod': [.0], # fraction of the input units to drop

'act_fun': 'relu', # activation functions for the hidden layer

'out_act_fun': 'linear', # output activation function

'opt_method': 'Adam', # minimization method

'cost_fun': 'mse', # error function

'lr_rate': 0.001, # learningrate

'lambd' : 0.0, # L2

'num_out' : 1 } # if binary --> 1 | regression--> num output | multi-class--> num of classes

# Get the model

model_ex3 = pipline(**INPUT)

# Print a summary of the model

model_ex3.summary()

# Train the model

estimator_ex3 = model_ex3.fit(x_train,

d_train,

epochs = 1000, #Number of epochs

validation_data=(x_val,d_val),

#batch_size = x_train.shape[0], # Batch size = all data (batch learning)

batch_size=100, # Batch size for true SGD

verbose = 0)

# Call the stats function to print out statistics for classification problems

pred_trn = model_ex3.predict(x_train).reshape(d_train.shape)

pred_val = model_ex3.predict(x_val).reshape(d_val.shape)

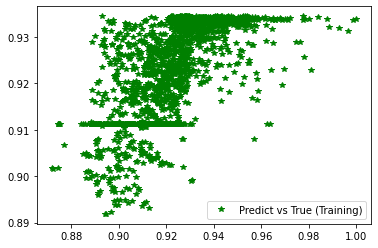

stats_reg(d_train, pred_trn, 'Training', estimator_ex3)

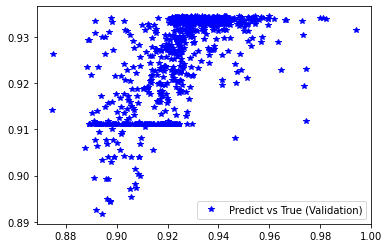

stats_reg(d_val, pred_val, 'Validation', estimator_ex3)

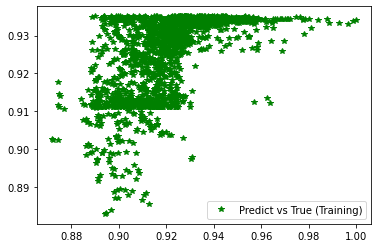

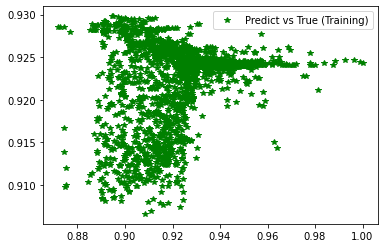

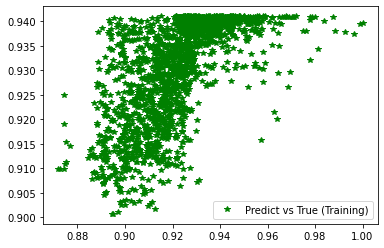

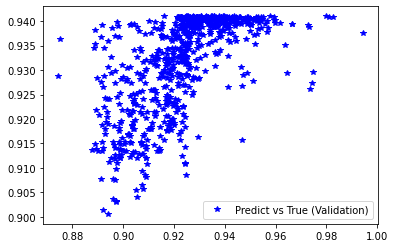

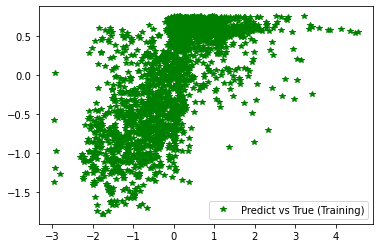

# Scatter plots of predicted and true values

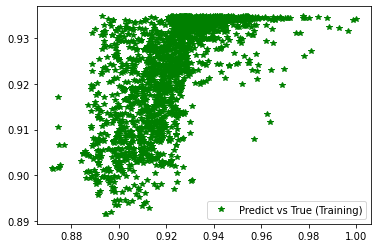

plt.figure('Training')

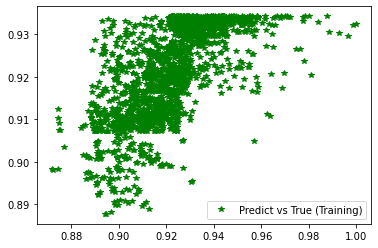

plt.plot(d_train, pred_trn, 'g*', label='Predict vs True (Training)')

plt.legend()

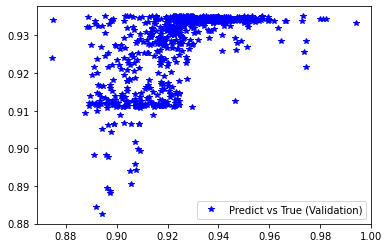

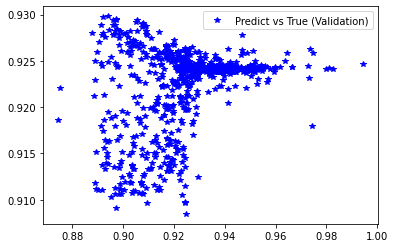

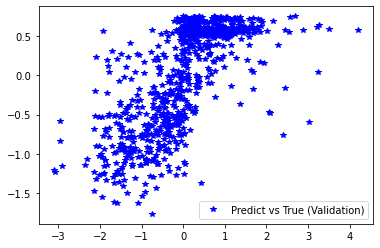

plt.figure('Validation')

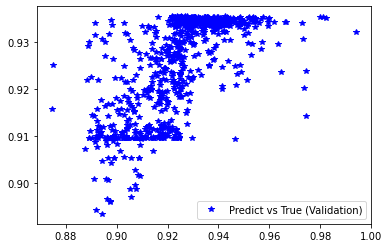

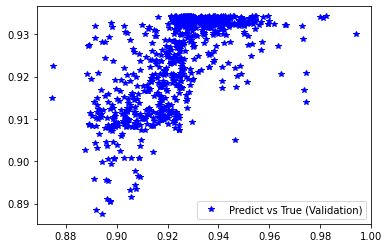

plt.plot(d_val, pred_val, 'b*', label='Predict vs True (Validation)')

plt.legend()

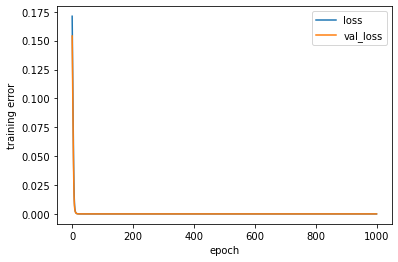

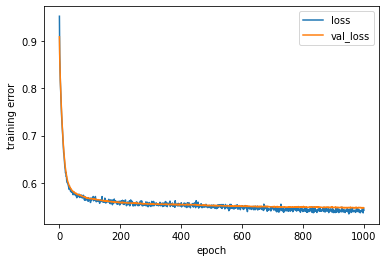

# Training history

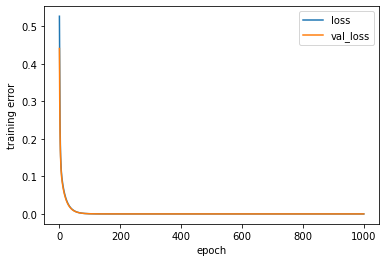

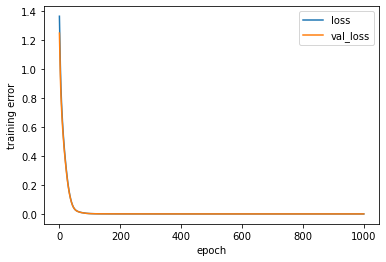

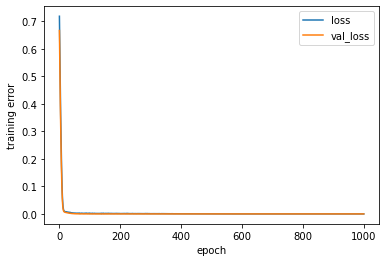

plt.figure('Model training')

plt.ylabel('training error')

plt.xlabel('epoch')

for k in ['loss', 'val_loss']:

plt.plot(estimator_ex3.history[k], label = k)

plt.legend(loc='best')

CPU times: user 9 µs, sys: 0 ns, total: 9 µs

Wall time: 15.5 µs

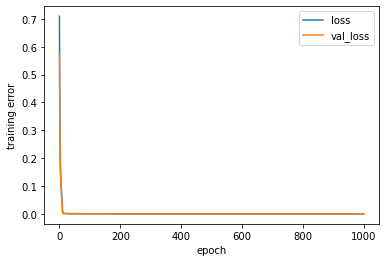

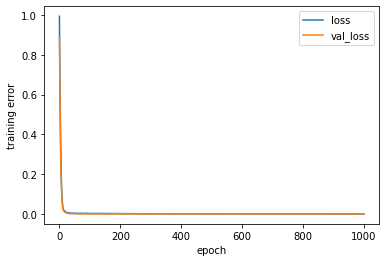

[231]:

%%time

# Define the network, cost function and minimization method

INPUT = {'inp_dim': x_train.shape[1],

'n_nod': [3], # number of nodes in hidden layer

'drop_nod': [.0], # fraction of the input units to drop

'act_fun': 'tanh', # activation functions for the hidden layer

'out_act_fun': 'linear', # output activation function

'opt_method': 'Adam', # minimization method

'cost_fun': 'mse', # error function

'lr_rate': 0.005, # learningrate

'lambd' : 0.0, # L2

'num_out' : 1 } # if binary --> 1 | regression--> num output | multi-class--> num of classes

# Get the model

model_ex3 = pipline(**INPUT)

# Print a summary of the model

model_ex3.summary()

# Train the model

estimator_ex3 = model_ex3.fit(x_train,

d_train,

epochs = 1000, # Number of epochs

validation_data=(x_val,d_val),

#batch_size = x_train.shape[0], # Batch size = all data (batch learning)

batch_size=150, # Batch size for true SGD

verbose = 0)

# Call the stats function to print out statistics for classification problems

pred_trn = model_ex3.predict(x_train).reshape(d_train.shape)

pred_val = model_ex3.predict(x_val).reshape(d_val.shape)

stats_reg(d_train, pred_trn, 'Training', estimator_ex3)

stats_reg(d_val, pred_val, 'Validation', estimator_ex3)

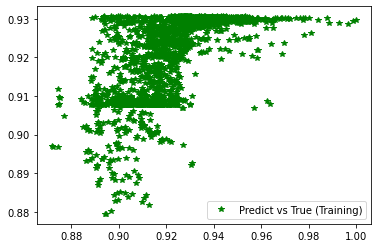

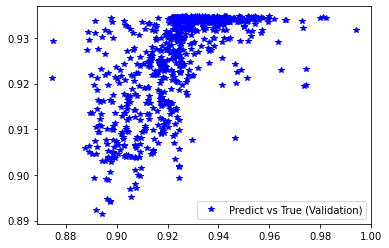

# Scatter plots of predicted and true values

plt.figure('Training')

plt.plot(d_train, pred_trn, 'g*', label='Predict vs True (Training)')

plt.legend()

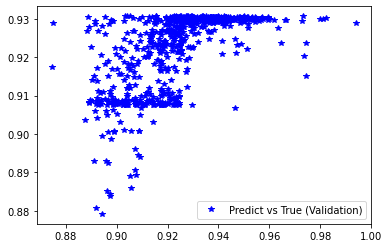

plt.figure('Validation')

plt.plot(d_val, pred_val, 'b*', label='Predict vs True (Validation)')

plt.legend()

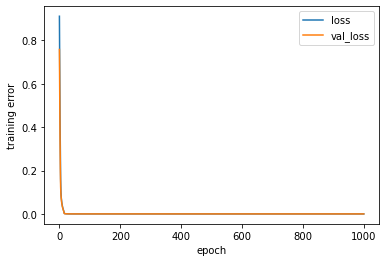

# Training history

plt.figure('Model training')

plt.ylabel('training error')

plt.xlabel('epoch')

for k in ['loss', 'val_loss']:

plt.plot(estimator_ex3.history[k], label = k)

plt.legend(loc='best')

Model: "model_7"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

main_input (InputLayer) [(None, 2)] 0

_________________________________________________________________

dense_14 (Dense) (None, 3) 9

_________________________________________________________________

dropout_7 (Dropout) (None, 3) 0

_________________________________________________________________

dense_15 (Dense) (None, 1) 4

=================================================================

Total params: 13

Trainable params: 13

Non-trainable params: 0

_________________________________________________________________

########## STATISTICS for Training Data ##########

MSE 0.00017876314814202487

CorrCoeff 0.612490603484116

##################################################

########## STATISTICS for Validation Data ##########

MSE 0.00019656501535791904

CorrCoeff 0.6010231101273652

##################################################

CPU times: user 29min 48s, sys: 53min 44s, total: 1h 23min 33s

Wall time: 3min 34s

[231]:

<matplotlib.legend.Legend at 0x7f40d4148f70>

[232]:

%%time

# Define the network, cost function and minimization method

INPUT = {'inp_dim': x_train.shape[1],

'n_nod': [3], # number of nodes in hidden layer

'drop_nod': [.0], # fraction of the input units to drop

'act_fun': 'sigmoid', # activation functions for the hidden layer

'out_act_fun': 'linear', # output activation function

'opt_method': 'Adam', # minimization method

'cost_fun': 'mse', # error function

'lr_rate': 0.005, # learningrate

'lambd' : 0.0, # L2

'num_out' : 1 } # if binary --> 1 | regression--> num output | multi-class--> num of classes

# Get the model

model_ex3 = pipline(**INPUT)

# Print a summary of the model

model_ex3.summary()

# Train the model

estimator_ex3 = model_ex3.fit(x_train,

d_train,

epochs = 1000, # Number of epochs

validation_data=(x_val,d_val),

#batch_size = x_train.shape[0], # Batch size = all data (batch learning)

batch_size=150, # Batch size for true SGD

verbose = 0)

# Call the stats function to print out statistics for classification problems

pred_trn = model_ex3.predict(x_train).reshape(d_train.shape)

pred_val = model_ex3.predict(x_val).reshape(d_val.shape)

stats_reg(d_train, pred_trn, 'Training', estimator_ex3)

stats_reg(d_val, pred_val, 'Validation', estimator_ex3)

# Scatter plots of predicted and true values

plt.figure('Training')

plt.plot(d_train, pred_trn, 'g*', label='Predict vs True (Training)')

plt.legend()

plt.figure('Validation')

plt.plot(d_val, pred_val, 'b*', label='Predict vs True (Validation)')

plt.legend()

# Training history

plt.figure('Model training')

plt.ylabel('training error')

plt.xlabel('epoch')

for k in ['loss', 'val_loss']:

plt.plot(estimator_ex3.history[k], label = k)

plt.legend(loc='best')

Model: "model_8"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

main_input (InputLayer) [(None, 2)] 0

_________________________________________________________________

dense_16 (Dense) (None, 3) 9

_________________________________________________________________

dropout_8 (Dropout) (None, 3) 0

_________________________________________________________________

dense_17 (Dense) (None, 1) 4

=================================================================

Total params: 13

Trainable params: 13

Non-trainable params: 0

_________________________________________________________________

########## STATISTICS for Training Data ##########

MSE 0.00016998716455418617

CorrCoeff 0.6229274626422414

##################################################

########## STATISTICS for Validation Data ##########

MSE 0.0001797070144675672

CorrCoeff 0.6126583323489367

##################################################

CPU times: user 28min 57s, sys: 51min 55s, total: 1h 20min 52s

Wall time: 3min 27s

[232]:

<matplotlib.legend.Legend at 0x7f41042b55e0>

[233]:

%%time

# Define the network, cost function and minimization method

INPUT = {'inp_dim': x_train.shape[1],

'n_nod': [3], # number of nodes in hidden layer

'drop_nod': [.0], # fraction of the input units to drop

'act_fun': 'relu', # activation functions for the hidden layer

'out_act_fun': 'sigmoid', # output activation function

'opt_method': 'Adam', # minimization method

'cost_fun': 'mse', # error function

'lr_rate': 0.005, # learningrate

'lambd' : 0.0, # L2

'num_out' : 1 } # if binary --> 1 | regression--> num output | multi-class--> num of classes

# Get the model

model_ex3 = pipline(**INPUT)

# Print a summary of the model

model_ex3.summary()

# Train the model

estimator_ex3 = model_ex3.fit(x_train,

d_train,

epochs = 1000, # Number of epochs

validation_data=(x_val,d_val),

#batch_size = x_train.shape[0], # Batch size = all data (batch learning)

batch_size=150, # Batch size for true SGD

verbose = 0)

# Call the stats function to print out statistics for classification problems

pred_trn = model_ex3.predict(x_train).reshape(d_train.shape)

pred_val = model_ex3.predict(x_val).reshape(d_val.shape)

stats_reg(d_train, pred_trn, 'Training', estimator_ex3)

stats_reg(d_val, pred_val, 'Validation', estimator_ex3)

# Scatter plots of predicted and true values

plt.figure('Training')

plt.plot(d_train, pred_trn, 'g*', label='Predict vs True (Training)')

plt.legend()

plt.figure('Validation')

plt.plot(d_val, pred_val, 'b*', label='Predict vs True (Validation)')

plt.legend()

# Training history

plt.figure('Model training')

plt.ylabel('training error')

plt.xlabel('epoch')

for k in ['loss', 'val_loss']:

plt.plot(estimator_ex3.history[k], label = k)

plt.legend(loc='best')

Model: "model_9"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

main_input (InputLayer) [(None, 2)] 0

_________________________________________________________________

dense_18 (Dense) (None, 3) 9

_________________________________________________________________

dropout_9 (Dropout) (None, 3) 0

_________________________________________________________________

dense_19 (Dense) (None, 1) 4

=================================================================

Total params: 13

Trainable params: 13

Non-trainable params: 0

_________________________________________________________________

########## STATISTICS for Training Data ##########

MSE 0.00025753240333870053

CorrCoeff 0.28453889146907146

##################################################

########## STATISTICS for Validation Data ##########

MSE 0.0002598793071229011

CorrCoeff 0.32232902575332284

##################################################

CPU times: user 29min 27s, sys: 52min 55s, total: 1h 22min 22s

Wall time: 3min 31s

[233]:

<matplotlib.legend.Legend at 0x7f4104622520>

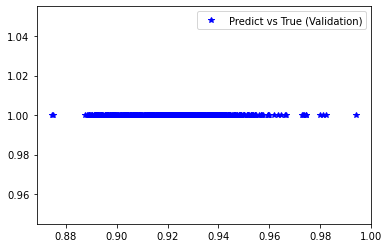

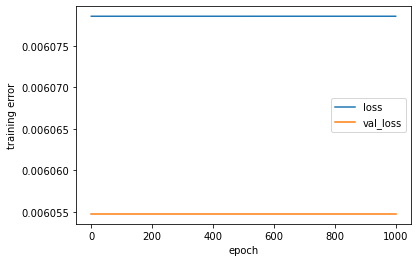

[234]:

%%time

# Define the network, cost function and minimization method

INPUT = {'inp_dim': x_train.shape[1],

'n_nod': [3], # number of nodes in hidden layer

'drop_nod': [.0], # fraction of the input units to drop

'act_fun': 'relu', # activation functions for the hidden layer

'out_act_fun': 'softmax', # output activation function

'opt_method': 'Adam', # minimization method

'cost_fun': 'mse', # error function

'lr_rate': 0.005, # learningrate

'lambd' : 0.0, # L2

'num_out' : 1 } # if binary --> 1 | regression--> num output | multi-class--> num of classes

# Get the model

model_ex3 = pipline(**INPUT)

# Print a summary of the model

model_ex3.summary()

# Train the model

estimator_ex3 = model_ex3.fit(x_train,

d_train,

epochs = 1000, # Number of epochs

validation_data=(x_val,d_val),

#batch_size = x_train.shape[0], # Batch size = all data (batch learning)

batch_size=150, # Batch size for true SGD

verbose = 0)

# Call the stats function to print out statistics for classification problems

pred_trn = model_ex3.predict(x_train).reshape(d_train.shape)

pred_val = model_ex3.predict(x_val).reshape(d_val.shape)

stats_reg(d_train, pred_trn, 'Training', estimator_ex3)

stats_reg(d_val, pred_val, 'Validation', estimator_ex3)

# Scatter plots of predicted and true values

plt.figure('Training')

plt.plot(d_train, pred_trn, 'g*', label='Predict vs True (Training)')

plt.legend()

plt.figure('Validation')

plt.plot(d_val, pred_val, 'b*', label='Predict vs True (Validation)')

plt.legend()

# Training history

plt.figure('Model training')

plt.ylabel('training error')

plt.xlabel('epoch')

for k in ['loss', 'val_loss']:

plt.plot(estimator_ex3.history[k], label = k)

plt.legend(loc='best')

Model: "model_10"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

main_input (InputLayer) [(None, 2)] 0

_________________________________________________________________

dense_20 (Dense) (None, 3) 9

_________________________________________________________________

dropout_10 (Dropout) (None, 3) 0

_________________________________________________________________

dense_21 (Dense) (None, 1) 4

=================================================================

Total params: 13

Trainable params: 13

Non-trainable params: 0

_________________________________________________________________

########## STATISTICS for Training Data ##########

MSE 0.006078554317355156

CorrCoeff nan

##################################################

########## STATISTICS for Validation Data ##########

MSE 0.0060547417961061

CorrCoeff nan

##################################################

CPU times: user 30min 4s, sys: 53min 53s, total: 1h 23min 58s

Wall time: 3min 35s

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/lib/function_base.py:2642: RuntimeWarning: invalid value encountered in true_divide

c /= stddev[:, None]

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/lib/function_base.py:2643: RuntimeWarning: invalid value encountered in true_divide

c /= stddev[None, :]

[234]:

<matplotlib.legend.Legend at 0x7f414e98f1f0>

[235]:

%%time

# Define the network, cost function and minimization method

INPUT = {'inp_dim': x_train.shape[1],

'n_nod': [3], # number of nodes in hidden layer

'drop_nod': [.0], # fraction of the input units to drop

'act_fun': 'relu', # activation functions for the hidden layer

'out_act_fun': 'linear', # output activation function

'opt_method': 'SGD', # minimization method

'cost_fun': 'mse', # error function

'lr_rate': 0.005, # learningrate

'lambd' : 0.0, # L2

'num_out' : 1 } # if binary --> 1 | regression--> num output | multi-class--> num of classes

# Get the model

model_ex3 = pipline(**INPUT)

# Print a summary of the model

model_ex3.summary()

# Train the model

estimator_ex3 = model_ex3.fit(x_train,

d_train,

epochs = 1000, # Number of epochs

validation_data=(x_val,d_val),

#batch_size = x_train.shape[0], # Batch size = all data (batch learning)

batch_size=150, # Batch size for true SGD

verbose = 0)

# Call the stats function to print out statistics for classification problems

pred_trn = model_ex3.predict(x_train).reshape(d_train.shape)

pred_val = model_ex3.predict(x_val).reshape(d_val.shape)

stats_reg(d_train, pred_trn, 'Training', estimator_ex3)

stats_reg(d_val, pred_val, 'Validation', estimator_ex3)

# Scatter plots of predicted and true values

plt.figure('Training')

plt.plot(d_train, pred_trn, 'g*', label='Predict vs True (Training)')

plt.legend()

plt.figure('Validation')

plt.plot(d_val, pred_val, 'b*', label='Predict vs True (Validation)')

plt.legend()

# Training history

plt.figure('Model training')

plt.ylabel('training error')

plt.xlabel('epoch')

for k in ['loss', 'val_loss']:

plt.plot(estimator_ex3.history[k], label = k)

plt.legend(loc='best')

Model: "model_11"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

main_input (InputLayer) [(None, 2)] 0

_________________________________________________________________

dense_22 (Dense) (None, 3) 9

_________________________________________________________________

dropout_11 (Dropout) (None, 3) 0

_________________________________________________________________

dense_23 (Dense) (None, 1) 4

=================================================================

Total params: 13

Trainable params: 13

Non-trainable params: 0

_________________________________________________________________

########## STATISTICS for Training Data ##########

MSE 0.0001678869448369369

CorrCoeff 0.629566898360391

##################################################

########## STATISTICS for Validation Data ##########

MSE 0.00017670282977633178

CorrCoeff 0.6177834055093928

##################################################

CPU times: user 29min, sys: 52min 7s, total: 1h 21min 7s

Wall time: 3min 27s

[235]:

<matplotlib.legend.Legend at 0x7f4104457400>

[236]:

%%time

# Define the network, cost function and minimization method

INPUT = {'inp_dim': x_train.shape[1],

'n_nod': [3], # number of nodes in hidden layer

'drop_nod': [.0], # fraction of the input units to drop

'act_fun': 'relu', # activation functions for the hidden layer

'out_act_fun': 'linear', # output activation function

'opt_method': 'Nadam', # minimization method

'cost_fun': 'mse', # error function

'lr_rate': 0.005, # learningrate

'lambd' : 0.0, # L2

'num_out' : 1 } # if binary --> 1 | regression--> num output | multi-class--> num of classes

# Get the model

model_ex3 = pipline(**INPUT)

# Print a summary of the model

model_ex3.summary()

# Train the model

estimator_ex3 = model_ex3.fit(x_train,

d_train,

epochs = 1000, # Number of epochs

validation_data=(x_val,d_val),

#batch_size = x_train.shape[0], # Batch size = all data (batch learning)

batch_size=150, # Batch size for true SGD

verbose = 0)

# Call the stats function to print out statistics for classification problems

pred_trn = model_ex3.predict(x_train).reshape(d_train.shape)

pred_val = model_ex3.predict(x_val).reshape(d_val.shape)

stats_reg(d_train, pred_trn, 'Training', estimator_ex3)

stats_reg(d_val, pred_val, 'Validation', estimator_ex3)

# Scatter plots of predicted and true values

plt.figure('Training')

plt.plot(d_train, pred_trn, 'g*', label='Predict vs True (Training)')

plt.legend()

plt.figure('Validation')

plt.plot(d_val, pred_val, 'b*', label='Predict vs True (Validation)')

plt.legend()

# Training history

plt.figure('Model training')

plt.ylabel('training error')

plt.xlabel('epoch')

for k in ['loss', 'val_loss']:

plt.plot(estimator_ex3.history[k], label = k)

plt.legend(loc='best')

Model: "model_12"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

main_input (InputLayer) [(None, 2)] 0

_________________________________________________________________

dense_24 (Dense) (None, 3) 9

_________________________________________________________________

dropout_12 (Dropout) (None, 3) 0

_________________________________________________________________

dense_25 (Dense) (None, 1) 4

=================================================================

Total params: 13

Trainable params: 13

Non-trainable params: 0

_________________________________________________________________

########## STATISTICS for Training Data ##########

MSE 0.00016082111687865108

CorrCoeff 0.65416212866746

##################################################

########## STATISTICS for Validation Data ##########

MSE 0.00017367156397085637

CorrCoeff 0.6334759651999986

##################################################

CPU times: user 29min 51s, sys: 53min 54s, total: 1h 23min 46s

Wall time: 3min 34s

[236]:

<matplotlib.legend.Legend at 0x7f40f5c47b80>

[237]:

%%time

# Define the network, cost function and minimization method

INPUT = {'inp_dim': x_train.shape[1],

'n_nod': [3], # number of nodes in hidden layer

'drop_nod': [.0], # fraction of the input units to drop

'act_fun': 'relu', # activation functions for the hidden layer

'out_act_fun': 'linear', # output activation function

'opt_method': 'RMSprop', # minimization method

'cost_fun': 'mse', # error function

'lr_rate': 0.005, # learningrate

'lambd' : 0.0, # L2

'num_out' : 1 } # if binary --> 1 | regression--> num output | multi-class--> num of classes

# Get the model

model_ex3 = pipline(**INPUT)

# Print a summary of the model

model_ex3.summary()

# Train the model

estimator_ex3 = model_ex3.fit(x_train,

d_train,

epochs = 1000, # Number of epochs

validation_data=(x_val,d_val),

#batch_size = x_train.shape[0], # Batch size = all data (batch learning)

batch_size=150, # Batch size for true SGD

verbose = 0)

# Call the stats function to print out statistics for classification problems

pred_trn = model_ex3.predict(x_train).reshape(d_train.shape)

pred_val = model_ex3.predict(x_val).reshape(d_val.shape)

stats_reg(d_train, pred_trn, 'Training', estimator_ex3)

stats_reg(d_val, pred_val, 'Validation', estimator_ex3)

# Scatter plots of predicted and true values

plt.figure('Training')

plt.plot(d_train, pred_trn, 'g*', label='Predict vs True (Training)')

plt.legend()

plt.figure('Validation')

plt.plot(d_val, pred_val, 'b*', label='Predict vs True (Validation)')

plt.legend()

# Training history

plt.figure('Model training')

plt.ylabel('training error')

plt.xlabel('epoch')

for k in ['loss', 'val_loss']:

plt.plot(estimator_ex3.history[k], label = k)

plt.legend(loc='best')

Model: "model_13"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

main_input (InputLayer) [(None, 2)] 0

_________________________________________________________________

dense_26 (Dense) (None, 3) 9

_________________________________________________________________

dropout_13 (Dropout) (None, 3) 0

_________________________________________________________________

dense_27 (Dense) (None, 1) 4

=================================================================

Total params: 13

Trainable params: 13

Non-trainable params: 0

_________________________________________________________________

########## STATISTICS for Training Data ##########

MSE 0.00021665332315023988

CorrCoeff 0.6523700632199257

##################################################

########## STATISTICS for Validation Data ##########

MSE 0.0002438862866256386

CorrCoeff 0.6308466959417949

##################################################

CPU times: user 30min 18s, sys: 54min 16s, total: 1h 24min 34s

Wall time: 3min 40s

[237]:

<matplotlib.legend.Legend at 0x7f416e550ca0>

[238]:

%%time

# Define the network, cost function and minimization method

INPUT = {'inp_dim': x_train.shape[1],

'n_nod': [3], # number of nodes in hidden layer

'drop_nod': [.01], # fraction of the input units to drop

'act_fun': 'relu', # activation functions for the hidden layer

'out_act_fun': 'linear', # output activation function

'opt_method': 'Adam', # minimization method

'cost_fun': 'mse', # error function

'lr_rate': 0.005, # learningrate

'lambd' : 0.0, # L2

'num_out' : 1 } # if binary --> 1 | regression--> num output | multi-class--> num of classes

# Get the model

model_ex3 = pipline(**INPUT)

# Print a summary of the model

model_ex3.summary()

# Train the model

estimator_ex3 = model_ex3.fit(x_train,

d_train,

epochs = 1000, # Number of epochs

validation_data=(x_val,d_val),

batch_size = x_train.shape[0], # Batch size = all data (batch learning)

#batch_size=150, # Batch size for true SGD

verbose = 0)

# Call the stats function to print out statistics for classification problems

pred_trn = model_ex3.predict(x_train).reshape(d_train.shape)

pred_val = model_ex3.predict(x_val).reshape(d_val.shape)

stats_reg(d_train, pred_trn, 'Training', estimator_ex3)

stats_reg(d_val, pred_val, 'Validation', estimator_ex3)

# Scatter plots of predicted and true values

plt.figure('Training')

plt.plot(d_train, pred_trn, 'g*', label='Predict vs True (Training)')

plt.legend()

plt.figure('Validation')

plt.plot(d_val, pred_val, 'b*', label='Predict vs True (Validation)')

plt.legend()

# Training history

plt.figure('Model training')

plt.ylabel('training error')

plt.xlabel('epoch')

for k in ['loss', 'val_loss']:

plt.plot(estimator_ex3.history[k], label = k)

plt.legend(loc='best')

Model: "model_14"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

main_input (InputLayer) [(None, 2)] 0

_________________________________________________________________

dense_28 (Dense) (None, 3) 9

_________________________________________________________________

dropout_14 (Dropout) (None, 3) 0

_________________________________________________________________

dense_29 (Dense) (None, 1) 4

=================================================================

Total params: 13

Trainable params: 13

Non-trainable params: 0

_________________________________________________________________

########## STATISTICS for Training Data ##########

MSE 0.0011568532790988684

CorrCoeff 0.27274236652685657

##################################################

########## STATISTICS for Validation Data ##########

MSE 0.00026253453688696027

CorrCoeff 0.31293852699811564

##################################################

CPU times: user 26min 50s, sys: 43min 48s, total: 1h 10min 38s

Wall time: 3min 4s

[238]:

<matplotlib.legend.Legend at 0x7f40f5e03fa0>

[239]:

%%time

# Define the network, cost function and minimization method

INPUT = {'inp_dim': x_train.shape[1],

'n_nod': [3], # number of nodes in hidden layer

'drop_nod': [.01], # fraction of the input units to drop

'act_fun': 'relu', # activation functions for the hidden layer

'out_act_fun': 'linear', # output activation function

'opt_method': 'Adam', # minimization method

'cost_fun': 'mse', # error function

'lr_rate': 0.005, # learningrate

'lambd' : 0.0, # L2

'num_out' : 1 } # if binary --> 1 | regression--> num output | multi-class--> num of classes

# Get the model

model_ex3 = pipline(**INPUT)

# Print a summary of the model

model_ex3.summary()

# Train the model

estimator_ex3 = model_ex3.fit(x_train,

d_train,

epochs = 1000, # Number of epochs

validation_data=(x_val,d_val),

#batch_size = x_train.shape[0], # Batch size = all data (batch learning)

batch_size=150, # Batch size for true SGD

verbose = 0)

# Call the stats function to print out statistics for classification problems

pred_trn = model_ex3.predict(x_train).reshape(d_train.shape)

pred_val = model_ex3.predict(x_val).reshape(d_val.shape)

stats_reg(d_train, pred_trn, 'Training', estimator_ex3)

stats_reg(d_val, pred_val, 'Validation', estimator_ex3)

# Scatter plots of predicted and true values

plt.figure('Training')

plt.plot(d_train, pred_trn, 'g*', label='Predict vs True (Training)')

plt.legend()

plt.figure('Validation')

plt.plot(d_val, pred_val, 'b*', label='Predict vs True (Validation)')

plt.legend()

# Training history

plt.figure('Model training')

plt.ylabel('training error')

plt.xlabel('epoch')

for k in ['loss', 'val_loss']:

plt.plot(estimator_ex3.history[k], label = k)

plt.legend(loc='best')

Model: "model_15"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

main_input (InputLayer) [(None, 2)] 0

_________________________________________________________________

dense_30 (Dense) (None, 3) 9

_________________________________________________________________

dropout_15 (Dropout) (None, 3) 0

_________________________________________________________________

dense_31 (Dense) (None, 1) 4

=================================================================

Total params: 13

Trainable params: 13

Non-trainable params: 0

_________________________________________________________________

########## STATISTICS for Training Data ##########

MSE 0.00017078199016395956

CorrCoeff 0.6634442592016316

##################################################

########## STATISTICS for Validation Data ##########

MSE 0.00016517832409590483

CorrCoeff 0.6523983887574706

##################################################

CPU times: user 30min 2s, sys: 53min 44s, total: 1h 23min 46s

Wall time: 3min 35s

[239]:

<matplotlib.legend.Legend at 0x7f40f5b2e8b0>

[243]:

%%time

# Define the network, cost function and minimization method

INPUT = {'inp_dim': x_train.shape[1],

'n_nod': [3], # number of nodes in hidden layer

'drop_nod': [.01], # fraction of the input units to drop

'act_fun': 'relu', # activation functions for the hidden layer

'out_act_fun': 'linear', # output activation function

'opt_method': 'Adam', # minimization method

'cost_fun': 'mse', # error function

'lr_rate': 0.001, # learningrate

'lambd' : 0.0, # L2

'num_out' : 1 } # if binary --> 1 | regression--> num output | multi-class--> num of classes

# Get the model

model_ex3 = pipline(**INPUT)

# Print a summary of the model

model_ex3.summary()

# Train the model

estimator_ex3 = model_ex3.fit(x_train,

d_train,

epochs = 1000, # Number of epochs

validation_data=(x_val,d_val),

#batch_size = x_train.shape[0], # Batch size = all data (batch learning)

batch_size=150, # Batch size for true SGD

verbose = 0)

# Call the stats function to print out statistics for classification problems

pred_trn = model_ex3.predict(x_train).reshape(d_train.shape)

pred_val = model_ex3.predict(x_val).reshape(d_val.shape)

stats_reg(d_train, pred_trn, 'Training', estimator_ex3)

stats_reg(d_val, pred_val, 'Validation', estimator_ex3)

# Scatter plots of predicted and true values

plt.figure('Training')

plt.plot(d_train, pred_trn, 'g*', label='Predict vs True (Training)')

plt.legend()

plt.figure('Validation')

plt.plot(d_val, pred_val, 'b*', label='Predict vs True (Validation)')

plt.legend()

# Training history

plt.figure('Model training')

plt.ylabel('training error')

plt.xlabel('epoch')

for k in ['loss', 'val_loss']:

plt.plot(estimator_ex3.history[k], label = k)

plt.legend(loc='best')

Model: "model_19"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

main_input (InputLayer) [(None, 2)] 0

_________________________________________________________________

dense_38 (Dense) (None, 3) 9

_________________________________________________________________

dropout_19 (Dropout) (None, 3) 0

_________________________________________________________________

dense_39 (Dense) (None, 1) 4

=================================================================

Total params: 13

Trainable params: 13

Non-trainable params: 0

_________________________________________________________________

########## STATISTICS for Training Data ##########

MSE 0.00016062274517025799

CorrCoeff 0.6645116727126994

##################################################

########## STATISTICS for Validation Data ##########

MSE 0.0001652294013183564

CorrCoeff 0.6536313767355278

##################################################

CPU times: user 29min 38s, sys: 53min 7s, total: 1h 22min 46s

Wall time: 3min 33s

[243]:

<matplotlib.legend.Legend at 0x7f40f6cab370>

[251]:

%%time

# Define the network, cost function and minimization method

INPUT = {'inp_dim': x_train.shape[1],

'n_nod': [6], # number of nodes in hidden layer

'drop_nod': [.01], # fraction of the input units to drop

'act_fun': 'relu', # activation functions for the hidden layer

'out_act_fun': 'linear', # output activation function

'opt_method': 'Adam', # minimization method

'cost_fun': 'mse', # error function

'lr_rate': 0.001, # learningrate

'lambd' : 0.0, # L2

'num_out' : 1 } # if binary --> 1 | regression--> num output | multi-class--> num of classes

# Get the model

model_ex3 = pipline(**INPUT)

# Print a summary of the model

model_ex3.summary()

# Train the model

estimator_ex3 = model_ex3.fit(x_train,

d_train,

epochs = 1000, # Number of epochs

validation_data=(x_val,d_val),

#batch_size = x_train.shape[0], # Batch size = all data (batch learning)

batch_size=150, # Batch size for true SGD

verbose = 0)

# Call the stats function to print out statistics for classification problems

pred_trn = model_ex3.predict(x_train).reshape(d_train.shape)

pred_val = model_ex3.predict(x_val).reshape(d_val.shape)

stats_reg(d_train, pred_trn, 'Training', estimator_ex3)

stats_reg(d_val, pred_val, 'Validation', estimator_ex3)

# Scatter plots of predicted and true values

plt.figure('Training')

plt.plot(d_train, pred_trn, 'g*', label='Predict vs True (Training)')

plt.legend()

plt.figure('Validation')

plt.plot(d_val, pred_val, 'b*', label='Predict vs True (Validation)')

plt.legend()

# Training history

plt.figure('Model training')

plt.ylabel('training error')

plt.xlabel('epoch')

for k in ['loss', 'val_loss']:

plt.plot(estimator_ex3.history[k], label = k)

plt.legend(loc='best')

Model: "model_27"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

main_input (InputLayer) [(None, 2)] 0

_________________________________________________________________

dense_54 (Dense) (None, 6) 18

_________________________________________________________________

dropout_27 (Dropout) (None, 6) 0

_________________________________________________________________

dense_55 (Dense) (None, 1) 7

=================================================================

Total params: 25

Trainable params: 25

Non-trainable params: 0

_________________________________________________________________

########## STATISTICS for Training Data ##########

MSE 0.00016617908840999007

CorrCoeff 0.668969350336334

##################################################

########## STATISTICS for Validation Data ##########

MSE 0.00016493901784997433

CorrCoeff 0.6560995009257078

##################################################

CPU times: user 29min 14s, sys: 52min 20s, total: 1h 21min 34s

Wall time: 3min 29s

[251]:

<matplotlib.legend.Legend at 0x7f4084637f10>

[252]:

%%time

# Define the network, cost function and minimization method

INPUT = {'inp_dim': x_train.shape[1],

'n_nod': [9], # number of nodes in hidden layer

'drop_nod': [.01], # fraction of the input units to drop

'act_fun': 'relu', # activation functions for the hidden layer

'out_act_fun': 'linear', # output activation function

'opt_method': 'Adam', # minimization method

'cost_fun': 'mse', # error function

'lr_rate': 0.001, # learningrate

'lambd' : 0.0, # L2

'num_out' : 1 } # if binary --> 1 | regression--> num output | multi-class--> num of classes

# Get the model

model_ex3 = pipline(**INPUT)

# Print a summary of the model

model_ex3.summary()

# Train the model

estimator_ex3 = model_ex3.fit(x_train,

d_train,

epochs = 1000, # Number of epochs

validation_data=(x_val,d_val),

#batch_size = x_train.shape[0], # Batch size = all data (batch learning)

batch_size=150, # Batch size for true SGD

verbose = 0)

# Call the stats function to print out statistics for classification problems

pred_trn = model_ex3.predict(x_train).reshape(d_train.shape)

pred_val = model_ex3.predict(x_val).reshape(d_val.shape)

stats_reg(d_train, pred_trn, 'Training', estimator_ex3)

stats_reg(d_val, pred_val, 'Validation', estimator_ex3)

# Scatter plots of predicted and true values

plt.figure('Training')

plt.plot(d_train, pred_trn, 'g*', label='Predict vs True (Training)')

plt.legend()

plt.figure('Validation')

plt.plot(d_val, pred_val, 'b*', label='Predict vs True (Validation)')

plt.legend()

# Training history

plt.figure('Model training')

plt.ylabel('training error')

plt.xlabel('epoch')

for k in ['loss', 'val_loss']:

plt.plot(estimator_ex3.history[k], label = k)

plt.legend(loc='best')

Model: "model_28"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

main_input (InputLayer) [(None, 2)] 0

_________________________________________________________________

dense_56 (Dense) (None, 9) 27

_________________________________________________________________

dropout_28 (Dropout) (None, 9) 0

_________________________________________________________________

dense_57 (Dense) (None, 1) 10

=================================================================

Total params: 37

Trainable params: 37

Non-trainable params: 0

_________________________________________________________________

########## STATISTICS for Training Data ##########

MSE 0.00015652619185857475

CorrCoeff 0.6705900837463853

##################################################

########## STATISTICS for Validation Data ##########

MSE 0.00016342980961780995

CorrCoeff 0.6556862691323897

##################################################

CPU times: user 30min 11s, sys: 54min 12s, total: 1h 24min 23s

Wall time: 3min 36s

[252]:

<matplotlib.legend.Legend at 0x7f40f5a0f160>

[254]:

%%time

# Define the network, cost function and minimization method

INPUT = {'inp_dim': x_train.shape[1],

'n_nod': [12], # number of nodes in hidden layer

'drop_nod': [.01], # fraction of the input units to drop

'act_fun': 'relu', # activation functions for the hidden layer

'out_act_fun': 'linear', # output activation function

'opt_method': 'Adam', # minimization method

'cost_fun': 'mse', # error function

'lr_rate': 0.001, # learningrate

'lambd' : 0.0, # L2

'num_out' : 1 } # if binary --> 1 | regression--> num output | multi-class--> num of classes

# Get the model

model_ex3 = pipline(**INPUT)

# Print a summary of the model

model_ex3.summary()

# Train the model

estimator_ex3 = model_ex3.fit(x_train,

d_train,

epochs = 1000, # Number of epochs

validation_data=(x_val,d_val),

#batch_size = x_train.shape[0], # Batch size = all data (batch learning)

batch_size=150, # Batch size for true SGD

verbose = 0)

# Call the stats function to print out statistics for classification problems

pred_trn = model_ex3.predict(x_train).reshape(d_train.shape)

pred_val = model_ex3.predict(x_val).reshape(d_val.shape)

stats_reg(d_train, pred_trn, 'Training', estimator_ex3)

stats_reg(d_val, pred_val, 'Validation', estimator_ex3)

# Scatter plots of predicted and true values

plt.figure('Training')

plt.plot(d_train, pred_trn, 'g*', label='Predict vs True (Training)')

plt.legend()

plt.figure('Validation')

plt.plot(d_val, pred_val, 'b*', label='Predict vs True (Validation)')

plt.legend()

# Training history

plt.figure('Model training')

plt.ylabel('training error')

plt.xlabel('epoch')

for k in ['loss', 'val_loss']:

plt.plot(estimator_ex3.history[k], label = k)

plt.legend(loc='best')

Model: "model_30"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

main_input (InputLayer) [(None, 2)] 0

_________________________________________________________________

dense_60 (Dense) (None, 12) 36

_________________________________________________________________

dropout_30 (Dropout) (None, 12) 0

_________________________________________________________________

dense_61 (Dense) (None, 1) 13

=================================================================

Total params: 49

Trainable params: 49

Non-trainable params: 0

_________________________________________________________________

########## STATISTICS for Training Data ##########

MSE 0.00015669157437514514

CorrCoeff 0.6730087345978446

##################################################

########## STATISTICS for Validation Data ##########

MSE 0.00016233550559263676

CorrCoeff 0.6582768498106989

##################################################

CPU times: user 29min 44s, sys: 53min 19s, total: 1h 23min 3s

Wall time: 3min 32s

[254]:

<matplotlib.legend.Legend at 0x7f4104127a00>

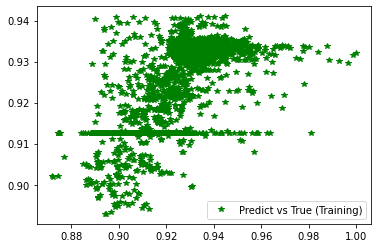

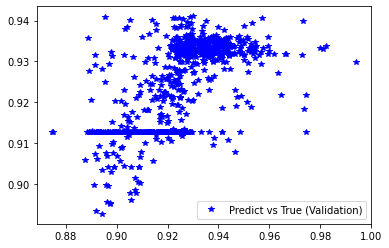

[61]:

%%time

# Define the network, cost function and minimization method

INPUT = {'inp_dim': x_train.shape[1],

'n_nod': [15], # number of nodes in hidden layer

'drop_nod': [.01], # fraction of the input units to drop

'act_fun': 'relu', # activation functions for the hidden layer

'out_act_fun': 'linear', # output activation function

'opt_method': 'Adam', # minimization method

'cost_fun': 'mse', # error function

'lr_rate': 0.001, # learningrate

'lambd' : 0.0, # L2

'num_out' : 1 } # if binary --> 1 | regression--> num output | multi-class--> num of classes

# Get the model

model_ex3 = pipline(**INPUT)

# Print a summary of the model

model_ex3.summary()

# Train the model

estimator_ex3 = model_ex3.fit(x_train,

d_train,

epochs = 1000, # Number of epochs

validation_data=(x_val,d_val),

#batch_size = x_train.shape[0], # Batch size = all data (batch learning)

batch_size=100, # Batch size for true SGD

verbose = 0)

# Call the stats function to print out statistics for classification problems

pred_trn = model_ex3.predict(x_train).reshape(d_train.shape)

pred_val = model_ex3.predict(x_val).reshape(d_val.shape)

stats_reg(d_train, pred_trn, 'Training', estimator_ex3)

stats_reg(d_val, pred_val, 'Validation', estimator_ex3)

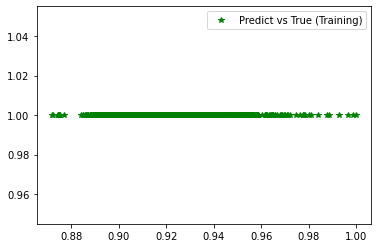

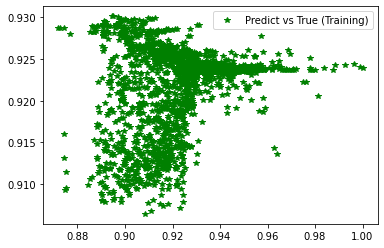

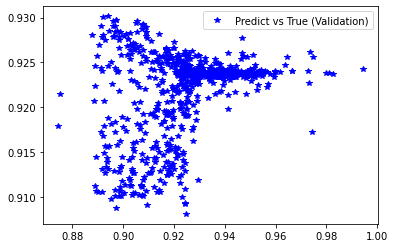

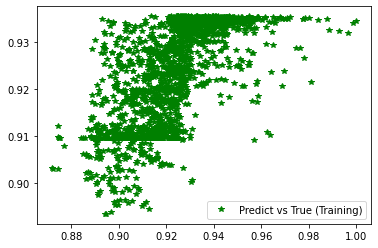

# Scatter plots of predicted and true values

plt.figure('Training')

plt.plot(d_train, pred_trn, 'g*', label='Predict vs True (Training)')

plt.legend()

plt.figure('Validation')

plt.plot(d_val, pred_val, 'b*', label='Predict vs True (Validation)')

plt.legend()

# Training history

plt.figure('Model training')

plt.ylabel('training error')

plt.xlabel('epoch')

for k in ['loss', 'val_loss']:

plt.plot(estimator_ex3.history[k], label = k)

plt.legend(loc='best')

Model: "model_33"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

main_input (InputLayer) [(None, 2)] 0

_________________________________________________________________

dense_66 (Dense) (None, 15) 45

_________________________________________________________________

dropout_33 (Dropout) (None, 15) 0

_________________________________________________________________

dense_67 (Dense) (None, 1) 16

=================================================================

Total params: 61

Trainable params: 61

Non-trainable params: 0

_________________________________________________________________

########## STATISTICS for Training Data ##########

MSE 0.5419843792915344

CorrCoeff 0.6782207027684092

##################################################

########## STATISTICS for Validation Data ##########

MSE 0.5472683906555176

CorrCoeff 0.6924202708728275

##################################################

CPU times: user 34min 48s, sys: 1h 4min 43s, total: 1h 39min 32s

Wall time: 4min 15s

[61]:

<matplotlib.legend.Legend at 0x7f4a18f3eee0>

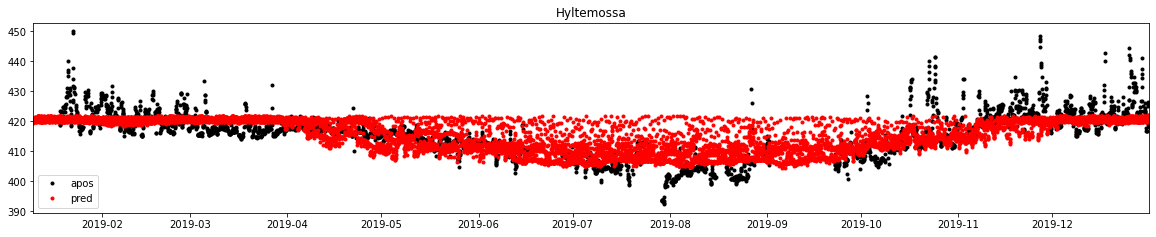

1.5.4.5.8. Plotting results

[25]:

%%time

x_comp = np.column_stack((biosphere_apos_htm, fossil_apos_htm))

x_std = standard(x, x_comp)

d_pred = model_ex3.predict(x_std).reshape(biosphere_apos_htm.shape)

d_pred_dstd = de_standard(d, d_pred)

f, ax = subplots(1, 1, figsize=(20, 3.5))

ax.plot(dbs.time, dbs.mix_apos, label='apos', marker='.', lw=0, color='k')

ax.plot(tid, d_pred_dstd, label='pred', marker='.', lw=0, color='r')

ax.legend()

ax.set_title('Hyltemossa')

ax.set_xlim(db.observations.time.min(), db.observations.time.max())

CPU times: user 7.38 s, sys: 13.9 s, total: 21.3 s

Wall time: 991 ms

[25]:

(17905.958333333332, 18261.958333333332)

[27]:

%%time

x_std = standard(x, x)

d_pred = model_ex3.predict(x_std).reshape(biosphere_apos_htm_nogap.shape)

d_pred_dstd = de_standard(d, d_pred)

f, ax = subplots(1, 1, figsize=(20, 3.5))

ax.plot(dbs.time, dbs.mix_apos, label='apos', marker='.', lw=0, color='k')

ax.plot(dbs.time, d_pred_dstd, label='pred', marker='.', lw=0, color='r')

ax.legend()

ax.set_title('Hyltemossa')

ax.set_xlim(db.observations.time.min(), db.observations.time.max())

plt.savefig()

CPU times: user 3.45 s, sys: 6.88 s, total: 10.3 s

Wall time: 500 ms

[27]:

(17905.958333333332, 18261.958333333332)

1.5.4.5.9. Training MLPs for all sites

[16]:

%%time

for isite, site in enumerate(db.sites.itertuples()):

dbs = db.observations.loc[db.observations.site == site.Index]

lat = np.where((np.array(emis_apos['biosphere']['lats']) >= list(dbs.lat)[0]-0.25) & (np.array(emis_apos['biosphere']['lats']) <= list(dbs.lat)[0]+0.25))[0][0]

lon = np.where((np.array(emis_apos['biosphere']['lons']) >= list(dbs.lon)[0]-0.25) & (np.array(emis_apos['biosphere']['lons']) <= list(dbs.lon)[0]+0.25))[0][0]

biosphere_apos = emis_apos['biosphere']['emis'][:, lat, lon]

fossil_apos = emis_apos['fossil']['emis'][:, lat, lon]

time_filled = []

for i in range(len(list(dbs.time))):

t = where((array(tid) >= Timestamp.to_pydatetime(list(dbs.time)[i])-timedelta(minutes=30)) & (array(tid) <= Timestamp.to_pydatetime(list(dbs.time)[i])+timedelta(minutes=30)))[0][0]

time_filled.append(t)

biosphere_apos_nogap = biosphere_apos[time_filled]

fossil_apos_nogap = fossil_apos[time_filled]

x = column_stack((biosphere_apos_nogap, fossil_apos_nogap))

d = array(dbs.mix_apos)

# Generate training and validation data

train_size = int(x.shape[0] * .75)

val_size = x.shape[0] - train_size

train_index = np.sort(np.random.choice(x.shape[0], train_size, replace=False))

x_train = x[[train_index]]

d_train = d[[train_index]]

x_val = np.delete(x, train_index, 0)

d_val = np.delete(d, train_index, 0)

# Standardization of both inputs and targets

x_train = standard(x, x_train)

x_val = standard(x, x_val)

d_train = standard(d, d_train)

d_val = standard(d, d_val)

#MLP training

model = pipline(**INPUT)

estimator = model.fit(x_train,

d_train,

epochs = 1000,

validation_data=(x_val,d_val),

batch_size=150,

verbose = 0)

model.save(os.path.join('Model', site.Index))

print(site.Index, isite)

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

bir 0

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

bsd 1

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

cmn 2

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dec 3

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dig 4

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

gat 5

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

hpb 6

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

htm 7

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

hun 8

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

ipr 9

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

jfj 10

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

kit 11

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

kre 12

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

lin 13

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

lmu 14

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

lut 15

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

nor 16

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

ope 17

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

oxk 18

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]

<timed exec>:30: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

pal 19

/home/carlos/miniconda3/envs/geocomp/lib/python3.9/site-packages/numpy/ma/core.py:3220: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

dout = self.data[indx]